Getting Started With pylon AI#

To use pylon AI, you must install the following components:

- pylon Software Suite

- pylon Supplementary Package for pylon AI

The installation of the supplementary package is described in this topic.

You also need licenses for the vTools you want to use. For information about how to obtain and activate these licenses, see the vTool Licensing topic.

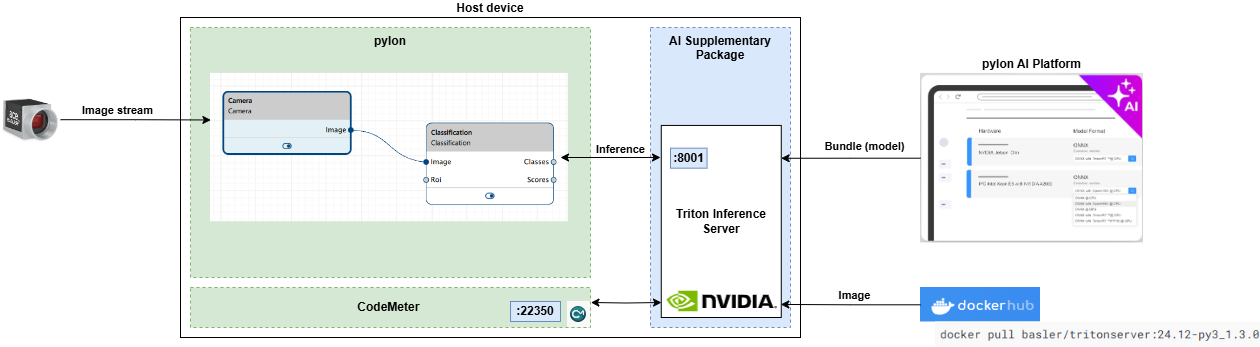

The pylon Supplementary Package for pylon AI delivers the backend service that allows the AI vTools to execute deep learning models. This backend service is the NVIDIA Triton Inference Server with Basler add-ons that support model encryption and licensing.

This is an overview of where the Triton Inference Server is placed in the development environment:

You have two options for installing the Triton Server:

- On a local computer

- On a remote computer in the network

The first option is recommended if you work alone on your own projects, while the second option is better suited to working in a team.

Basler doesn't provide live Triton server instances and doesn't recommend publishing the server as a public internet endpoint. You should always consider it as part of your development environment and protect it accordingly.

The pylon Supplementary Package for pylon AI includes a modified Docker image to prepare the Triton Inference Server for use with the pylon AI vTools and the pylon AI Agent. It also contains setup and launch scripts, as well as release notes and third-party license information.

System Requirements#

Ubuntu and Ubuntu for WSL#

- x86_64 CPU: Recent Intel Core i5 (or later) or equivalent.

- Memory: Minimum 8 GB of available RAM. 16 GB or more is recommended.

- Disk type: SSD is recommended.

- Disk space: Minimum 20 GB of free disk space for installation. The space required for the model repository depends on the size and number of models you plan to deploy. Models can range from a few megabytes to several gigabytes each.

- CUDA version: 12+

- GPU cards: Examples of suitable cards include NVIDIA RTX A5000, NVIDIA RTX A2000 Laptop GPU, and NVIDIA Tesla T4.

Info

A CPU is sufficient for running the Triton Inference Server. If you require faster performance, using a GPU is recommended.

Jetson Family with JetPack 6#

- Modules: All NVIDIA Jetson

- Memory: Minimum 8 GB of available RAM. 16 GB or more is recommended.

- Disk type: SSD is recommended.

- Disk space: Minimum 20 GB of free disk space for installation. The space required for the model repository depends on the size and number of models you plan to deploy. Models can range from a few megabytes to several gigabytes each.
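The memory and disk space minimums above can be checked before installation. The following is a minimal sketch, not part of the pylon package: the function names are illustrative, the RAM query relies on Linux `sysconf` values, and checking the home directory's file system is an assumption about where the installation lands.

```python
import os
import shutil

GB = 10 ** 9  # decimal gigabytes, matching the units used in the requirements


def free_disk_gb(path="."):
    """Return the free disk space, in GB, on the file system that holds `path`."""
    return shutil.disk_usage(path).free / GB


def available_ram_gb():
    """Return the available physical memory in GB (Linux), or None if unknown."""
    try:
        pages = os.sysconf("SC_AVPHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (ValueError, OSError, AttributeError):
        return None
    return pages * page_size / GB


def meets_minimum(value, minimum):
    """True if a measured value reaches the minimum; an unknown value counts as False."""
    return value is not None and value >= minimum


if __name__ == "__main__":
    disk = free_disk_gb(os.path.expanduser("~"))
    ram = available_ram_gb()
    print(f"Free disk space: {disk:.1f} GB, minimum 20 GB met: {meets_minimum(disk, 20)}")
    print(f"Available RAM: {ram} GB, minimum 8 GB met: {meets_minimum(ram, 8)}")
```

The disk check covers only the installation minimum. The space needed for the model repository still depends on the models you deploy.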

Prerequisites#

Info

- An internet connection is required during the installation process.

- Make sure that ports 8000, 8001, 8002, and 22350 are not blocked on your local computer.

Due to the variety and complexity of possible scenarios at the user's end, Basler is unable to provide a general solution. Therefore, potential access issues have to be handled by customers themselves with support from their IT department.
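One local cause of port problems is easy to rule out yourself: another process already occupying one of the ports listed above. The sketch below tries to bind each port on the loopback interface. It is an illustration only, the function names are hypothetical, and it does not detect firewall or proxy rules, which still require the checks described above.

```python
import socket

# Ports named in the prerequisites above.
REQUIRED_PORTS = (8000, 8001, 8002, 22350)


def port_is_free(port, host="127.0.0.1"):
    """Return True if a TCP socket can be bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


def occupied_ports(ports=REQUIRED_PORTS, host="127.0.0.1"):
    """Return the subset of `ports` that cannot be bound, e.g. because they are in use."""
    return [port for port in ports if not port_is_free(port, host)]


if __name__ == "__main__":
    busy = occupied_ports()
    print("Ports already in use:", busy if busy else "none")
```

Run the check before the Triton Inference Server is started. Once the server is running, the ports it uses are expected to show up as occupied.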

Ubuntu#

For more information, see Install Docker on Ubuntu.

If your computer has an NVIDIA GPU, see Installing NVIDIA drivers and the NVIDIA Container Toolkit.

Ubuntu for WSL#

Info

Don't install any Linux display drivers inside WSL. Drivers should only be installed directly on Windows.

Follow these instructions to install Ubuntu OS on WSL.

Follow these instructions to install Docker within Ubuntu on WSL.

Jetson Family (JetPack 6.x)#

Your device must be flashed using SDK Manager. JetPack 6.x is required. This already includes Docker.

All OS Variants#

After you have prepared the operating system, make sure that the following requirements are fulfilled:

- CodeMeter Runtime is installed.

  The installation of CodeMeter Runtime is part of the pylon Software Suite installation.

- Licenses for AI vTools are available.

  For more information, visit the Basler website.

- Docker Engine is installed.

  To check whether you have Docker Engine installed:

- For GPU usage: NVIDIA Container Toolkit is installed.

  To check whether you have this toolkit installed:

  If it hasn't been installed yet, read the following section.
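As a rough first check of the last two requirements, you can look for the corresponding executables on your `PATH`. This is a sketch under assumptions: it takes `docker` as the Docker Engine command-line client and `nvidia-ctk` as the command-line tool shipped with the NVIDIA Container Toolkit, and the function name is illustrative.

```python
import shutil

# Executables assumed to indicate each component; adjust if your setup differs.
TOOLS = {
    "Docker Engine": "docker",
    "NVIDIA Container Toolkit": "nvidia-ctk",
}


def locate_tools(tools=TOOLS):
    """Map each component name to the path of its executable, or None if not on PATH."""
    return {name: shutil.which(exe) for name, exe in tools.items()}


if __name__ == "__main__":
    for name, path in locate_tools().items():
        print(f"{name}: {path or 'not found on PATH'}")
```

Finding an executable only shows that the component is present. It doesn't confirm that the Docker daemon is running or that containers can access the GPU.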

Installing NVIDIA Drivers and the NVIDIA Container Toolkit (Required for GPU Usage)#

The NVIDIA Container Toolkit provides the libraries and tools required for GPU-accelerated computing. The Triton Inference Server uses CUDA to run GPU-accelerated operations, like model inference. The NVIDIA Container Toolkit version should match the version expected by the Triton Server and the models you are deploying.

To check the version of your NVIDIA drivers, open your terminal and type this command:

If no drivers or toolkit have been installed yet, follow the instructions on this page: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

Check this page to ensure that your NVIDIA driver is compatible with the NVIDIA Container Toolkit and the Triton Inference Server: https://docs.nvidia.com/deeplearning/triton-inference-server/release-notes/rel-24-11.html#rel-24-11
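If you want to read the installed driver version from a script, for example to compare it against the compatibility information linked above, the following sketch queries `nvidia-smi`. The function names are illustrative, and the sketch returns `None` when `nvidia-smi` is not available, as on a CPU-only system.

```python
import shutil
import subprocess


def parse_driver_versions(output):
    """Split nvidia-smi CSV output (one driver version per GPU) into a list."""
    return [line.strip() for line in output.splitlines() if line.strip()]


def query_driver_versions(executable="nvidia-smi"):
    """Return the driver version reported per GPU, or None if the tool is unavailable."""
    if shutil.which(executable) is None:
        return None
    result = subprocess.run(
        [executable, "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None
    return parse_driver_versions(result.stdout)


if __name__ == "__main__":
    print("NVIDIA driver versions:", query_driver_versions())
```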

Installing the Triton Inference Server#

The model repository is created in your home directory as part of the installation, e.g., /home/username/pylon_ai/model_repository. You must not rename or move this directory.

Online Installation#

To install the Triton Inference Server from the pylon Supplementary Package for pylon AI, follow these steps:

- Change to the directory that contains the pylon-supplementary-package-for-pylon-ai-*.tar.gz archive that you downloaded from the Basler website.

- Extract the pylon Supplementary Package for pylon AI tar.gz archive into a directory of your choice (replace ./pylon_ai_setup if you want to extract into a different directory).

- Switch to the folder into which you extracted the pylon Supplementary Package for pylon AI.

- Run the setup script with root permissions.
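The locate-and-extract part of these steps can also be scripted. The sketch below is one possible approach, with illustrative function names: it finds an archive matching the pattern above and extracts it into ./pylon_ai_setup. Running the setup script is deliberately left out, because its file name isn't given here.

```python
import glob
import tarfile
from pathlib import Path

ARCHIVE_PATTERN = "pylon-supplementary-package-for-pylon-ai-*.tar.gz"


def find_archive(directory="."):
    """Return the lexicographically last archive matching the pattern, or None."""
    matches = sorted(glob.glob(str(Path(directory) / ARCHIVE_PATTERN)))
    return Path(matches[-1]) if matches else None


def extract_package(archive, target="./pylon_ai_setup"):
    """Extract the archive into `target` and return the target path."""
    target_path = Path(target)
    target_path.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # Use the safer "data" extraction filter where this Python version offers it.
        if hasattr(tarfile, "data_filter"):
            tar.extractall(target_path, filter="data")
        else:
            tar.extractall(target_path)
    return target_path


if __name__ == "__main__":
    archive = find_archive(".")
    if archive is None:
        print("No matching archive found in the current directory.")
    else:
        print("Extracted to", extract_package(archive))
```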

Offline Installation#

If no internet connection is available on the target computer, follow these steps:

- On a computer with an internet connection, visit Docker Hub and download the Basler Triton Server Docker image.

  The image is available under this link: https://hub.docker.com/repository/docker/basler/tritonserver/general

- Transfer the image to the computer on which you want to install the Triton Server.

  You can use the following commands to perform steps 1 and 2. Example:

- Change to the directory that contains the pylon-supplementary-package-for-pylon-ai-*.tar.gz archive that you downloaded from the Basler website.

- Extract the pylon Supplementary Package for pylon AI tar.gz archive into a directory of your choice (replace ./pylon_ai_setup if you want to extract into a different directory).

- Copy the saved .tar file to the pylon_ai_setup folder.

- Run the setup script with root permissions.
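For the first two steps, a Docker image is typically downloaded with `docker pull` and written to a .tar file with `docker save`. The sketch below only assembles these two commands so you can review them before running them. The repository name is inferred from the Docker Hub link above, the tag and the .tar file name are placeholders you have to supply, and the function name is illustrative.

```python
import shlex

# Repository name inferred from the Docker Hub link above (assumption).
IMAGE_REPOSITORY = "basler/tritonserver"


def save_image_commands(tag, tar_file="tritonserver.tar"):
    """Return the docker commands that download the image and save it to a .tar file."""
    image = f"{IMAGE_REPOSITORY}:{tag}"
    return [
        ["docker", "pull", image],
        ["docker", "save", "-o", tar_file, image],
    ]


if __name__ == "__main__":
    # "<tag>" is a placeholder; look up the actual tag on Docker Hub.
    for argv in save_image_commands("<tag>"):
        print(shlex.join(argv))
```

The resulting .tar file is the one you transfer to the offline computer and copy to the pylon_ai_setup folder.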

Launching the Triton Inference Server#

This section details the three different ways to start the Inference Server. Choose the one that best suits your needs, bearing in mind the following information about running the server and vTools remotely.

When you launch the Inference Server, the server expects the model repository in the default location, i.e., /home/username/pylon_ai/model_repository. If you have accidentally renamed or deleted the repository, you must recreate it by reinstalling the server.
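Before launching, you can confirm that the repository is still in its default location. This is a minimal sketch with illustrative function names. It assumes the default path described above, i.e., pylon_ai/model_repository below the home directory of the current user.

```python
from pathlib import Path


def default_model_repository(home=None):
    """Return the default repository path, <home>/pylon_ai/model_repository."""
    base = Path(home) if home is not None else Path.home()
    return base / "pylon_ai" / "model_repository"


def repository_exists(home=None):
    """True if the default model repository is present as a directory."""
    return default_model_repository(home).is_dir()


if __name__ == "__main__":
    path = default_model_repository()
    print(f"{path}: {'found' if repository_exists() else 'missing'}")
```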

Running Server and vTools Remotely

If you're running the Triton Inference Server on a remote computer and are also running the AI vTools or AI Agent from another location, be aware of the following important restrictions and considerations:

- The time it takes for data to travel between the vTools making the inference request and the Triton Inference Server can impact the overall response time.

- High latency can slow down the inference process, especially for real-time applications.

- Limited network bandwidth can lead to slow or unreliable communication, particularly when transferring large amounts of data.

- Remote functionalities require ports 8000, 8001, and 8002 to be accessible. (This may require support from your IT department, e.g., running firewall and proxy checks.)
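To get a first impression of reachability and network delay, you can time a plain TCP connection to each of the three ports from the computer that runs the vTools. The sketch below is an illustration with hypothetical function names. It measures only connection setup, not inference time, and the host name in the example is a placeholder.

```python
import socket
import time

# Ports that must be accessible for remote use, as listed above.
TRITON_PORTS = (8000, 8001, 8002)


def connect_time_ms(host, port, timeout=2.0):
    """Return the TCP connect time to host:port in milliseconds, or None on failure."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return (time.perf_counter() - start) * 1000.0
    except OSError:
        return None


def probe_server(host, ports=TRITON_PORTS, timeout=2.0):
    """Map each port to its connect time in ms (None if it could not be reached)."""
    return {port: connect_time_ms(host, port, timeout) for port in ports}


if __name__ == "__main__":
    # "triton-host.example" is a placeholder for your server's host name or IP address.
    for port, elapsed in probe_server("triton-host.example").items():
        status = "unreachable" if elapsed is None else f"{elapsed:.1f} ms"
        print(f"Port {port}: {status}")
```

A port reported as unreachable may be blocked by a firewall or proxy, or the server may not be running.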

Launching the Server When Required#

If you don't want the Triton Inference Server to be launched as a Docker container every time your computer starts, you can use the following shell script to start it only when required.

- Switch to the folder into which you extracted the pylon Supplementary Package for pylon AI.

- Launch the Triton Inference Server.

Launching the Server on Boot#

To start the Triton Inference Server Docker container automatically when you boot your computer, or to restart it automatically after a crash, you can create a systemd service that meets your needs by running the run-pylon-ai-triton-inference-server.sh shell script with additional parameters.

- Switch to the folder into which you extracted the pylon Supplementary Package for pylon AI.

- Launch the installer script.

After you have installed the Triton Inference Server as a service, you can stop it temporarily when required. Bear in mind that it will be started automatically after a reboot of your device.

To temporarily stop the systemd service responsible for running the Triton Inference Server, open the terminal (in any directory) and run:

If you want to bring back the service, you have to start it by running:

If you're having issues with the currently running server managed by the service, you can restart it by running:

If you want to permanently disable the service, you can do this by running:
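The four operations above correspond to standard `systemctl` verbs. The sketch below maps each operation to its verb and builds the command for a given unit. The name of the unit created by the installer isn't stated here, so `<service-name>` in the example is a placeholder, and the function name is illustrative.

```python
import shlex

# Operations described above, mapped to the systemctl verb that performs them.
ACTIONS = {
    "stop temporarily": "stop",
    "start again": "start",
    "restart": "restart",
    "disable permanently": "disable",
}


def systemctl_command(action, unit):
    """Return the argv for applying `action` to the systemd unit `unit`."""
    return ["sudo", "systemctl", ACTIONS[action], unit]


if __name__ == "__main__":
    # "<service-name>" is a placeholder for the unit installed on your system.
    for action in ACTIONS:
        print(f"{action}: {shlex.join(systemctl_command(action, '<service-name>'))}")
```

Note that `systemctl disable` on its own only removes the automatic start at boot. A service that is currently running keeps running until it is stopped.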

Licensing#

You can find licensing information here or in the license file contained in the pylon Supplementary Package for pylon AI.

Troubleshooting#

CodeMeter is configured during the pylon installation so that the Triton Inference Server can access the required licenses.

In rare cases, this may fail. If that happens, configure CodeMeter as a network server:

- Open CodeMeter Control Center and click the WebAdmin button at the bottom right.

- In CodeMeter WebAdmin, navigate to Configuration > Server > Server Access.

- In the Network Server area, select Enable and confirm with Apply.

- Return to the CodeMeter Control Center.

- In the Process menu, click Restart CodeMeter Service to restart CodeMeter as a network server.

Known Issues#

ONNX Runtime Error on Jetson Orin

A runtime error appears on Jetson Orin devices when executing the run-pylon-ai-triton-inference-server.sh script if the device is running in low power mode. However, the model you are trying to load will still be loaded. To prevent this error, configure a higher power mode on the Jetson Orin.