pylon SDK Samples Manual#

Overview#

The pylon Software Suite includes an SDK with the following APIs:

- pylon Data Processing API for C++ (Windows, Linux)

- pylon API for C++ (Windows, Linux, and macOS)

- pylon API for C (Windows and Linux)

- pylon API for .NET languages, e.g., C# and VB.NET (Windows only)

Along with the APIs, the pylon Software Suite also includes a set of sample programs and documentation.

- On Windows operating systems, the source code for the samples can be found here:

<pylon installation directory>\Basler\pylon 7\Development\Samples

Example: C:\Program Files\Basler\pylon 7\Development\Samples

- On Linux or macOS operating systems, the source code for the samples can be copied from the archive to any location on the target computer.

For more information about programming using the pylon API, see the pylon API Documentation section.

Data Processing API for C++ (Windows, Linux)#

Barcode#

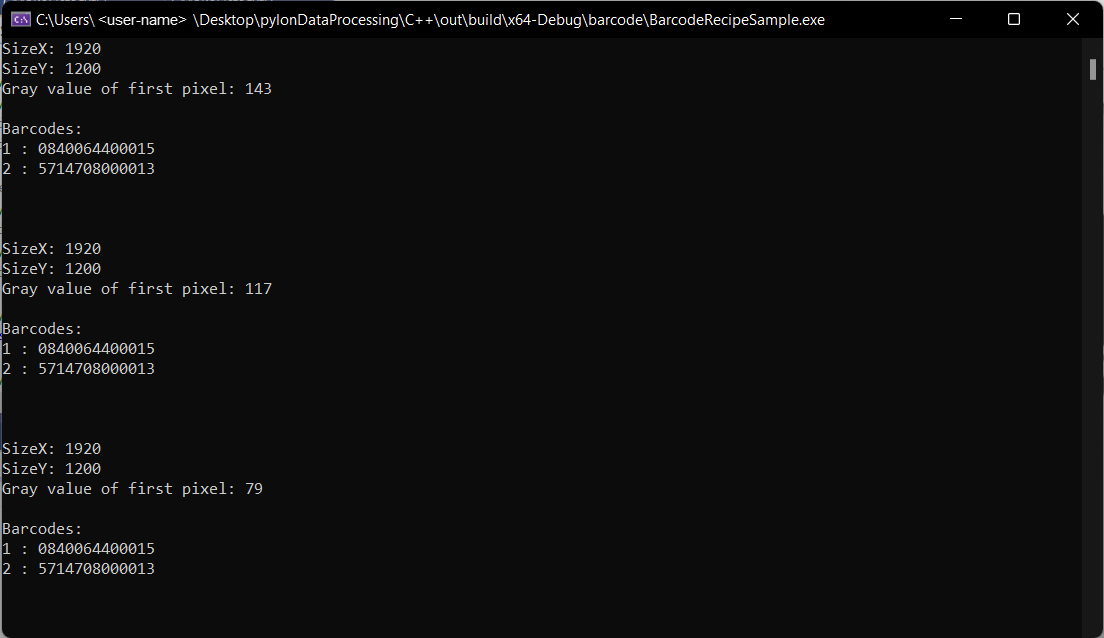

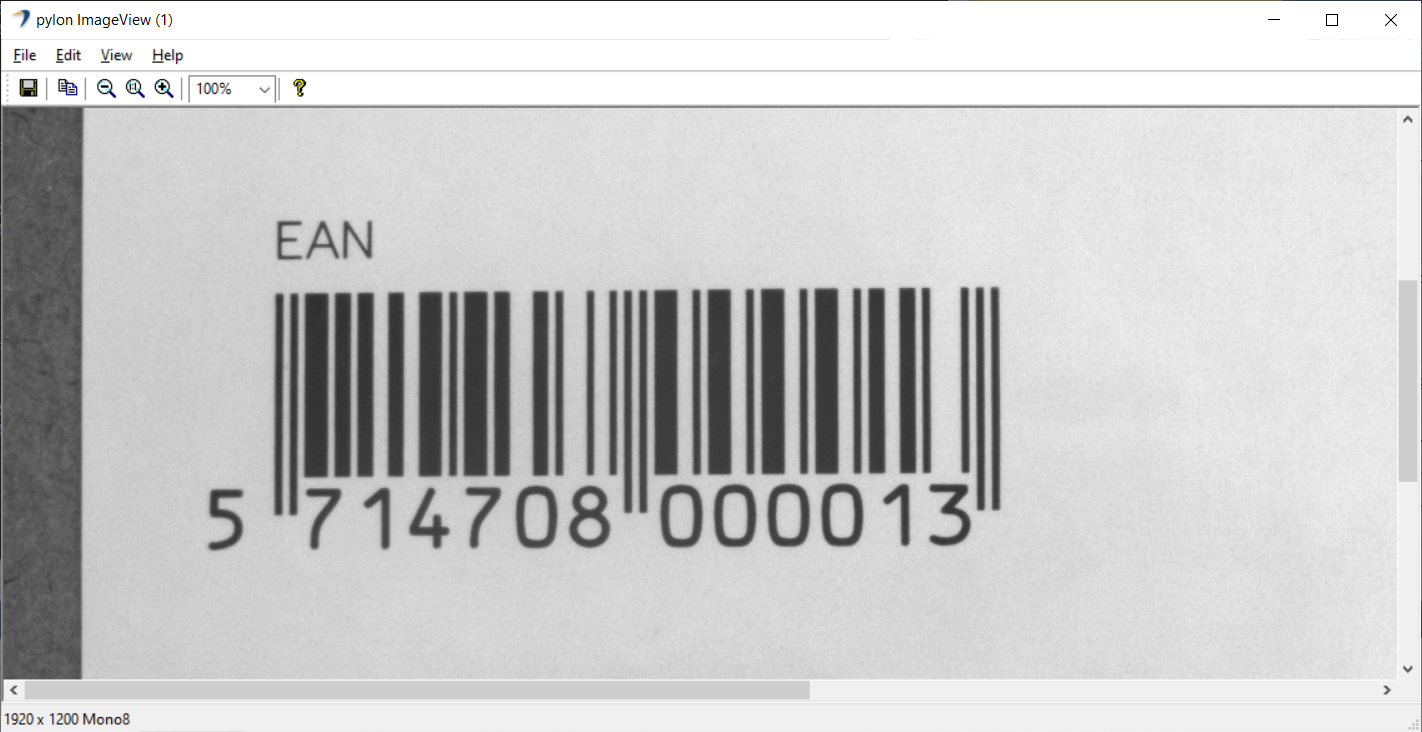

This sample demonstrates how to use the Barcode vTool. The Barcode vTool requires a valid evaluation license or runtime license.

This sample uses a predefined barcode.precipe file, the pylon camera emulation, and sample images to demonstrate reading of up to two barcodes of an EAN type.

Code#

The MyOutputObserver class is used to create a helper object that shows how to handle output data provided via the IOutputObserver::OutputDataPush interface method. Also, MyOutputObserver shows how a thread-safe queue can be implemented for later processing while pulling the output data.
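The thread-safe queue mentioned above can be sketched in plain C++ as follows. This is only an illustration of the pattern, not the code of the sample: the names ResultData, ResultQueue, Push(), and WaitAndPop() are hypothetical, and the sketch deliberately doesn't use any pylon types. The idea is that the observer callback pushes results from the recipe's thread while the application thread waits for them with a timeout.

```cpp
#include <chrono>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical result type. The real sample stores the recipe's output data here.
struct ResultData
{
    std::string barcode;
};

// Minimal thread-safe queue: Push() is meant to be called from the
// observer callback, WaitAndPop() from the thread that processes results.
class ResultQueue
{
public:
    void Push(ResultData result)
    {
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            m_queue.push_back(std::move(result));
        }
        // Wake up one waiting consumer after the lock has been released.
        m_available.notify_one();
    }

    // Copies the oldest result into 'result' and returns true,
    // or returns false if no result arrives within 'timeout'.
    bool WaitAndPop(ResultData& result, std::chrono::milliseconds timeout)
    {
        std::unique_lock<std::mutex> lock(m_mutex);
        if (!m_available.wait_for(lock, timeout, [this] { return !m_queue.empty(); }))
        {
            return false;
        }
        result = std::move(m_queue.front());
        m_queue.pop_front();
        return true;
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_available;
    std::deque<ResultData> m_queue;
};
```

In such a design, the callback only copies the data into the queue and returns quickly, so that time-consuming processing doesn't block the thread delivering the output data.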

The CRecipe class is used to create a recipe object representing a recipe file that is created using the pylon Viewer Workbench.

The recipe.Load() method is used to load a recipe file.

The recipe.PreAllocateResources() method allocates all needed resources, e.g., it opens the camera device and allocates buffers for grabbing.

The recipe.RegisterAllOutputsObserver() method is used to register the MyOutputObserver object, which is used for collecting the output data, e.g., barcodes.

The recipe.Stop() method is called to stop the processing.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

BuildersRecipe#

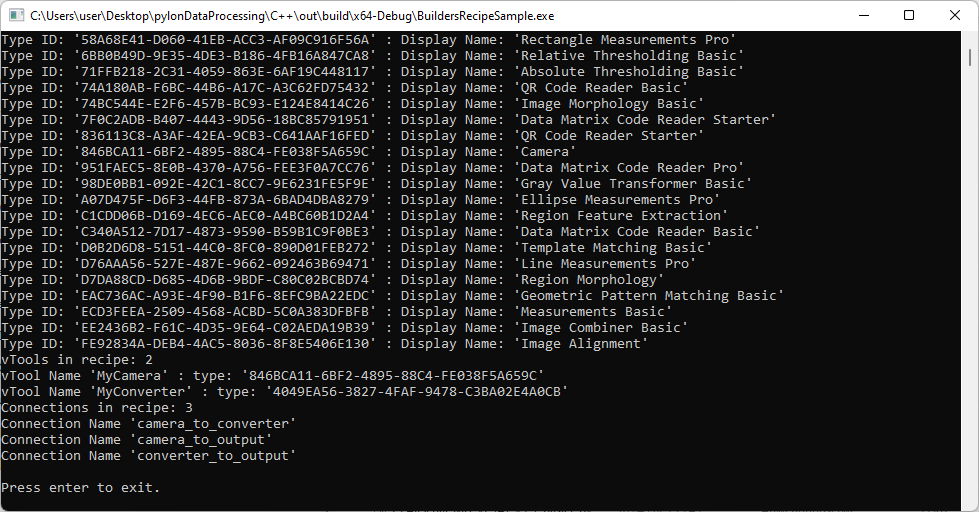

This sample demonstrates how to create and modify recipes programmatically.

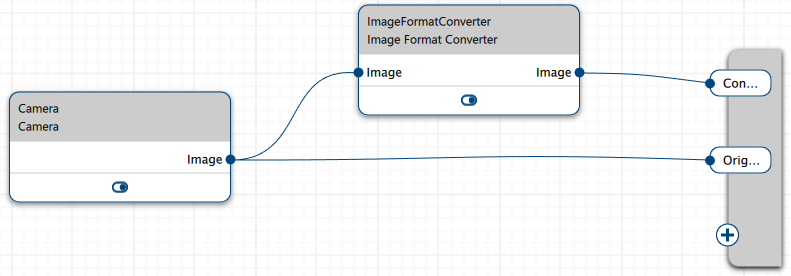

For demonstration purposes, this sample creates a recipe that contains a Camera vTool and an Image Format Converter vTool and sets up connections between the vTools and the recipe's output terminal.

Code#

The CBuildersRecipe class is used to query the type IDs of all vTool types available in your setup using the recipe.GetAvailableVToolTypeIDs() method. Using recipe.GetVToolDisplayNameForTypeID(), the vTools' display names are printed to the console.

The recipe.AddVTool() method is used to add vTools to the recipe with a string identifier using the vTool's type ID.

Info

While recipe.GetAvailableVToolTypeIDs() lists the type IDs of all vTools installed on your system, recipe.AddVTool() only allows you to add vTools for which you have the correct license. If you use recipe.AddVTool() with the type ID of a vTool without the correct license, the method will throw an exception.

The recipe.HasVTool() method can be used to check whether the recipe contains a vTool with a given identifier.

To get a list of the identifiers of all vTools in a recipe, the recipe.GetVToolIdentifiers() method is used.

The recipe.GetVToolTypeID() method is used to get the type ID of a vTool instance by its identifier.

The recipe.AddOutput() method is used to add two image outputs to the recipe.

The recipe.AddConnection() method is used to create the following connections:

- Connect the Image output of the Camera vTool to the Image input of the Image Format Converter vTool.

- Connect the Image output of the Camera vTool to an input of the recipe's output terminal called "OriginalImage".

- Connect the Image output of the Image Format Converter vTool to an input of the recipe's output terminal called "ConvertedImage".

The recipe.GetConnectionIdentifiers() method is used to get the identifiers of all connections in the recipe and to print them to the console.

The CBuildersRecipe class can be used like the CRecipe class to run the recipe that has been created.

The recipe.Start() method is called to start the processing.

The recipe.Stop() method is called to stop the processing.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

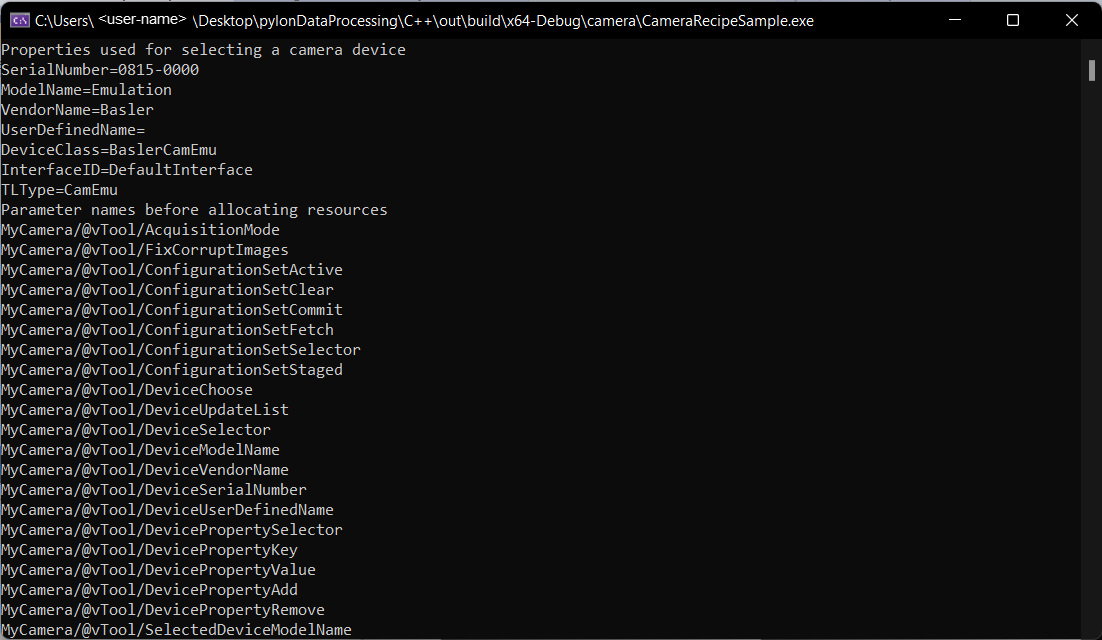

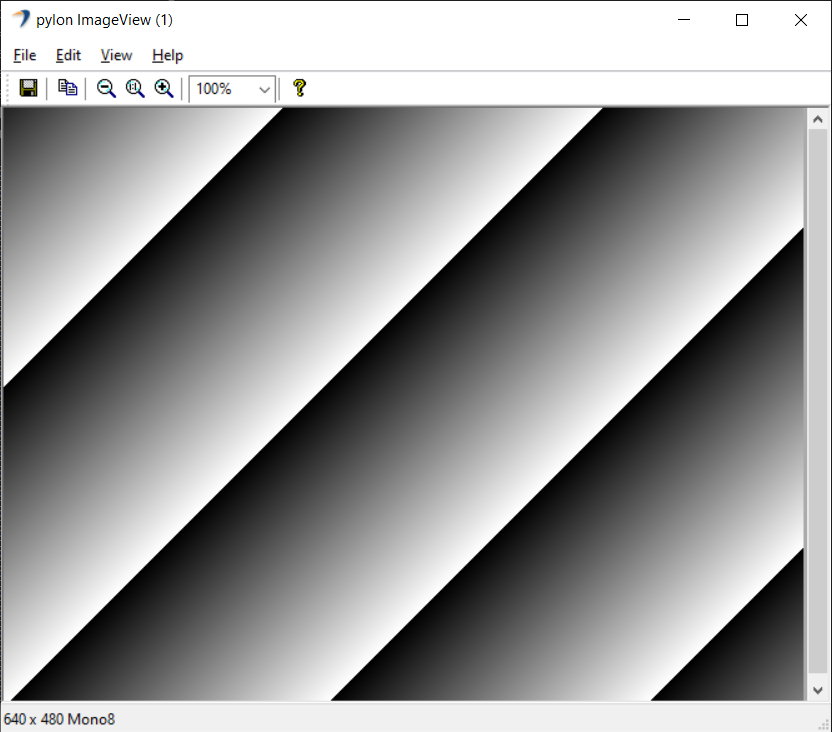

Camera#

This sample demonstrates how to use and parametrize the Camera vTool. The Camera vTool doesn't require a license.

This sample uses a predefined camera.precipe file and the pylon Camera Emulation for demonstration purposes.

Code#

The MyOutputObserver class is used to create a helper object that shows how to handle output data provided via the IOutputObserver::OutputDataPush interface method. Also, MyOutputObserver shows how a thread-safe queue can be implemented for later processing while pulling the output data.

The CRecipe class is used to create a recipe object that represents a recipe file created using the pylon Viewer Workbench.

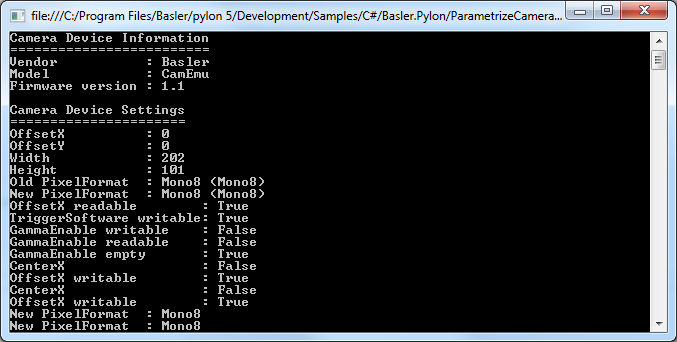

The recipe.Load() method is used to load a recipe file. After loading the recipe, some Pylon::CDeviceInfo properties of the camera are accessed and displayed on the console for demonstration purposes.

The recipe.PreAllocateResources() method allocates all needed resources, e.g., it opens the camera device and allocates buffers for grabbing. After opening the camera device, some camera parameters are read out and printed for demonstration purposes.

The recipe.RegisterAllOutputsObserver() method is used to register the MyOutputObserver object, which is used for collecting the output data, e.g., images.

The recipe.Stop() method is called to stop the processing.

The recipe.DeallocateResources() method is called to free all used resources.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

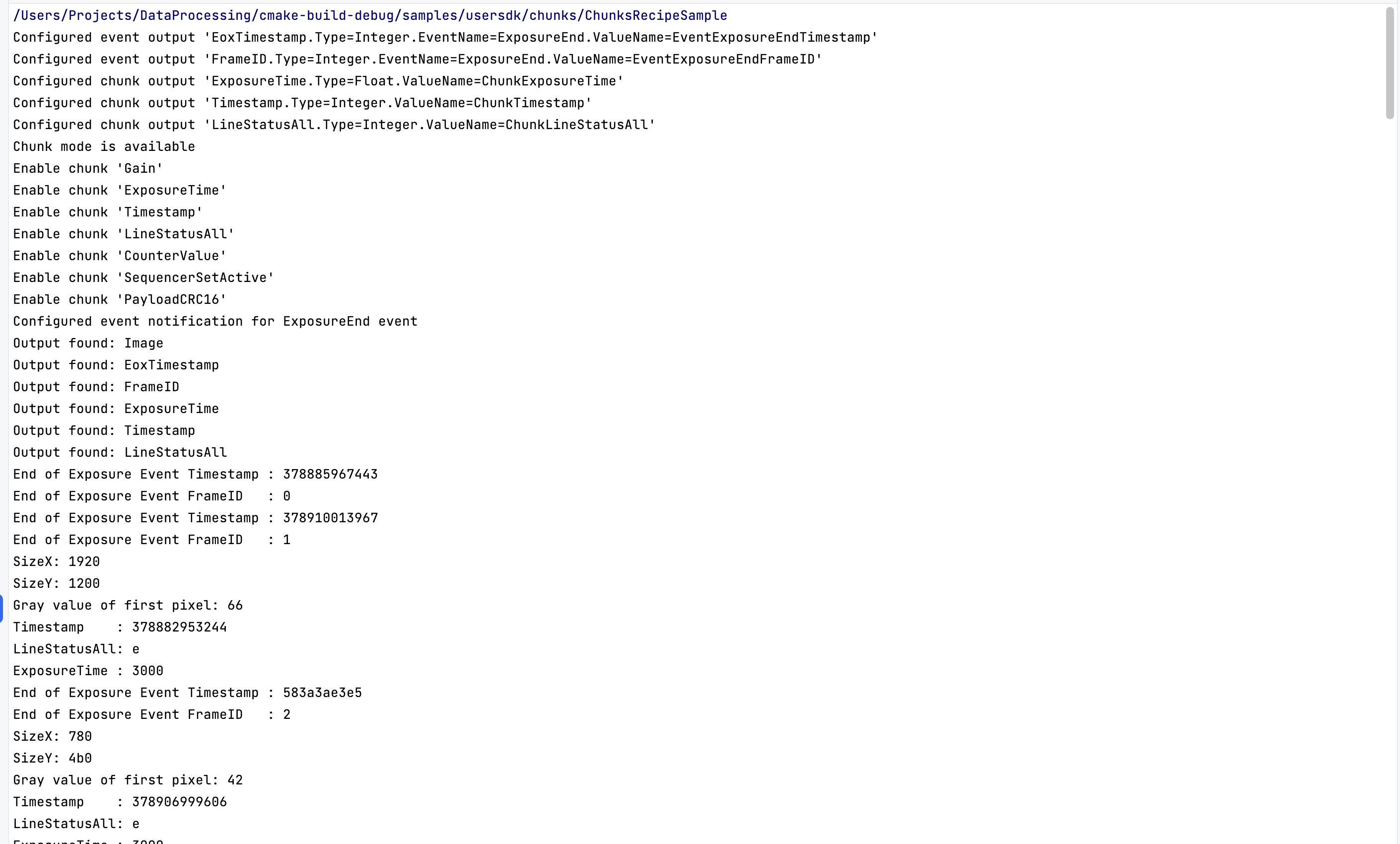

Chunks#

The Chunks and Events sample demonstrates how to turn on the Data Chunks and Event Notifications camera features in order to receive chunks and events from the Camera vTool and to output their data using dynamic pins. The Chunks and Events vTool doesn't require a license.

This sample extends the Camera sample.

Code#

"EoxTimestamp.Type=Integer.EventName=ExposureEnd.ValueName=EventExposureEndTimestamp",

"FrameID.Type=Integer.EventName=ExposureEnd.ValueName=EventExposureEndFrameID"

"ExposureTime.Type=Float.ValueName=ChunkExposureTime",

"Timestamp.Type=Integer.ValueName=ChunkTimestamp",

"LineStatusAll.Type=Integer.ValueName=ChunkLineStatusAll"

ValueName defines the feature to read. Type is the data type used for the output. The first item, EoxTimestamp in the example above, is the name of the pin.
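To make the structure of these pin description strings easier to see, the following sketch splits one of them into its parts. It is an illustration only: the Camera vTool parses these strings itself, and the PinDescription struct and the ParsePinDescription() function are hypothetical helpers that aren't part of the pylon API. The sketch assumes that the parts are separated by dots and that each part after the pin name has the form Key=Value, as in the examples above.

```cpp
#include <map>
#include <sstream>
#include <string>

// Hypothetical container for the parts of one pin description string.
struct PinDescription
{
    std::string pinName;                            // first item, e.g., "EoxTimestamp"
    std::map<std::string, std::string> attributes;  // e.g., "Type" -> "Integer"
};

// Splits a string such as
// "Timestamp.Type=Integer.ValueName=ChunkTimestamp"
// at the dots. The first part is the pin name, all others are Key=Value pairs.
inline PinDescription ParsePinDescription(const std::string& text)
{
    PinDescription result;
    std::istringstream stream(text);
    std::string part;
    bool isFirstPart = true;
    while (std::getline(stream, part, '.'))
    {
        if (isFirstPart)
        {
            result.pinName = part;
            isFirstPart = false;
            continue;
        }
        const std::string::size_type separator = part.find('=');
        if (separator != std::string::npos)
        {
            result.attributes[part.substr(0, separator)] = part.substr(separator + 1);
        }
    }
    return result;
}
```

Parsed this way, the event pins carry an EventName attribute in addition to Type and ValueName, whereas the chunk pins only carry Type and ValueName.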

Dynamic pins should be configured before allocating resources.

The camera is selected, and its node map is created when resources are allocated. Now, the camera has to be configured.

The sample program checks whether the camera selected supports chunk data. If so, the chunk data will be output together with the image data. Here, the chunks are selected in the node map one by one and are enabled. Depending on the camera model, different chunks are available.

Finally, the program tries to turn the generation of the Exposure End event on.

A generic output observer is attached to the recipe, and then the recipe is started. It runs until one hundred results (also called updates) have been collected and prints the values.

If chunks or events aren't supported, the program prints a warning message.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

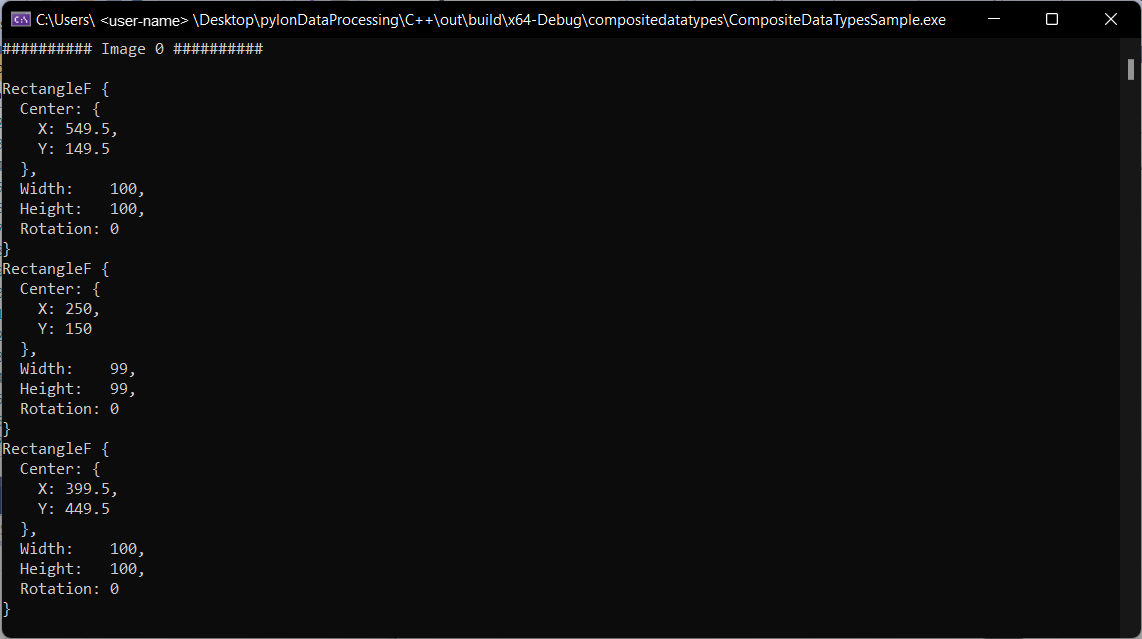

Composite Data Types#

This sample demonstrates how to use vTools that output composite data types, e.g., Absolute Thresholding, Region Morphology, Region Feature Extraction, etc. These vTools require a valid evaluation license or runtime license. Data types like PointF or RectangleF are composite data types.

This sample obtains information about the composition of a composite data type, e.g., RectangleF, and accesses its data, e.g., Center X, Center Y, Width, Height, and Rotation. To do so, it uses a predefined composite_data_types.precipe file, the pylon camera emulation, and sample images. Whether image coordinates in pixels or world coordinates in meters are used depends on the input or output terminal pin(s).

Code#

The RectangleF struct in the ResultData class is used to store the data of the composite data type.

The MyOutputObserver class is used to create a helper object that shows how to handle output data provided via the IOutputObserver::OutputDataPush interface method. Also, MyOutputObserver shows how a thread-safe queue can be implemented for later processing while pulling the output data.

The CVariant class and its GetSubValue() method show how to access the data of composite data types such as RectangleF.

The CRecipe class is used to create a recipe object that represents a recipe file created using the pylon Viewer Workbench.

The recipe.Load() method is used to load a recipe file.

The recipe.PreAllocateResources() method allocates all needed resources, e.g., it opens the camera device and allocates buffers for grabbing.

The recipe.RegisterAllOutputsObserver() method is used to register the MyOutputObserver object, which is used for collecting the output data, e.g., images and composite data types.

The recipe.Stop() method is called to stop the processing.

With the recipe.DeallocateResources() method you can free all used resources.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

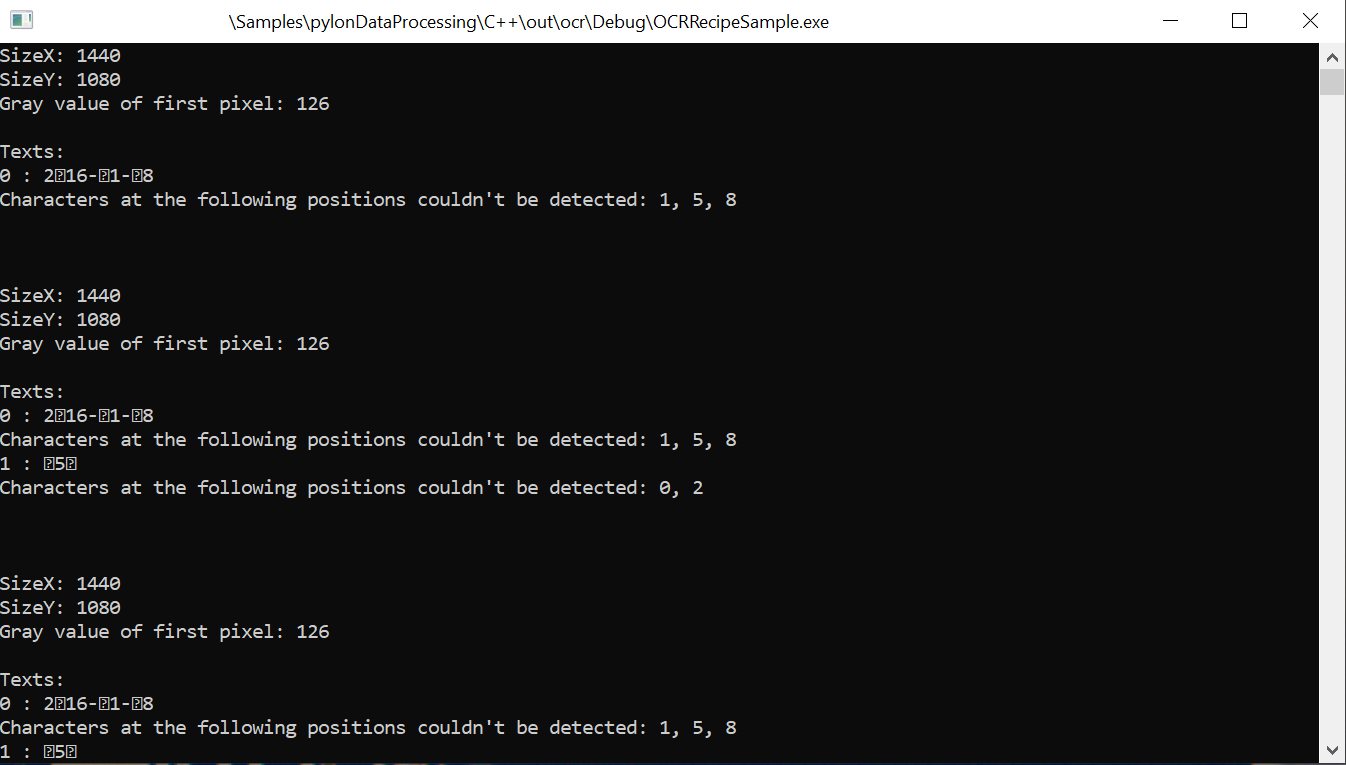

OCR#

This sample demonstrates how to use the OCR Basic vTool. The OCR Basic vTool requires a valid evaluation license or runtime license.

This sample uses a predefined ocr.precipe file as well as the Image Loading, Geometric Pattern Matching Basic, Image Alignment and OCR Basic vTools and sample images to demonstrate detecting characters in images.

Code#

The CGenericOutputObserver class is used to receive the output data from the recipe.

The CRecipe class is used to create a recipe object representing a recipe file that is created using the pylon Viewer Workbench.

The recipe.Load() method is used to load a recipe file.

The SourcePath parameter of the Image Loading vTool is set to provide the path to the sample images to the vTool.

The recipe.RegisterAllOutputsObserver() method is used to register the CGenericOutputObserver object, which is used for collecting the output data.

The recipe.Start() method is called to start the processing.

For each result received, the image dimensions and gray value of the first pixel are printed to the console.

Additionally, all detected characters are printed to the console. Characters that couldn't be detected are marked by the UTF-8 rejection character.

After half of the images have been processed, the character set of the OCR Basic vTool is changed to All. This results in all characters being detected.

The recipe.Stop() method is called to stop the processing.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

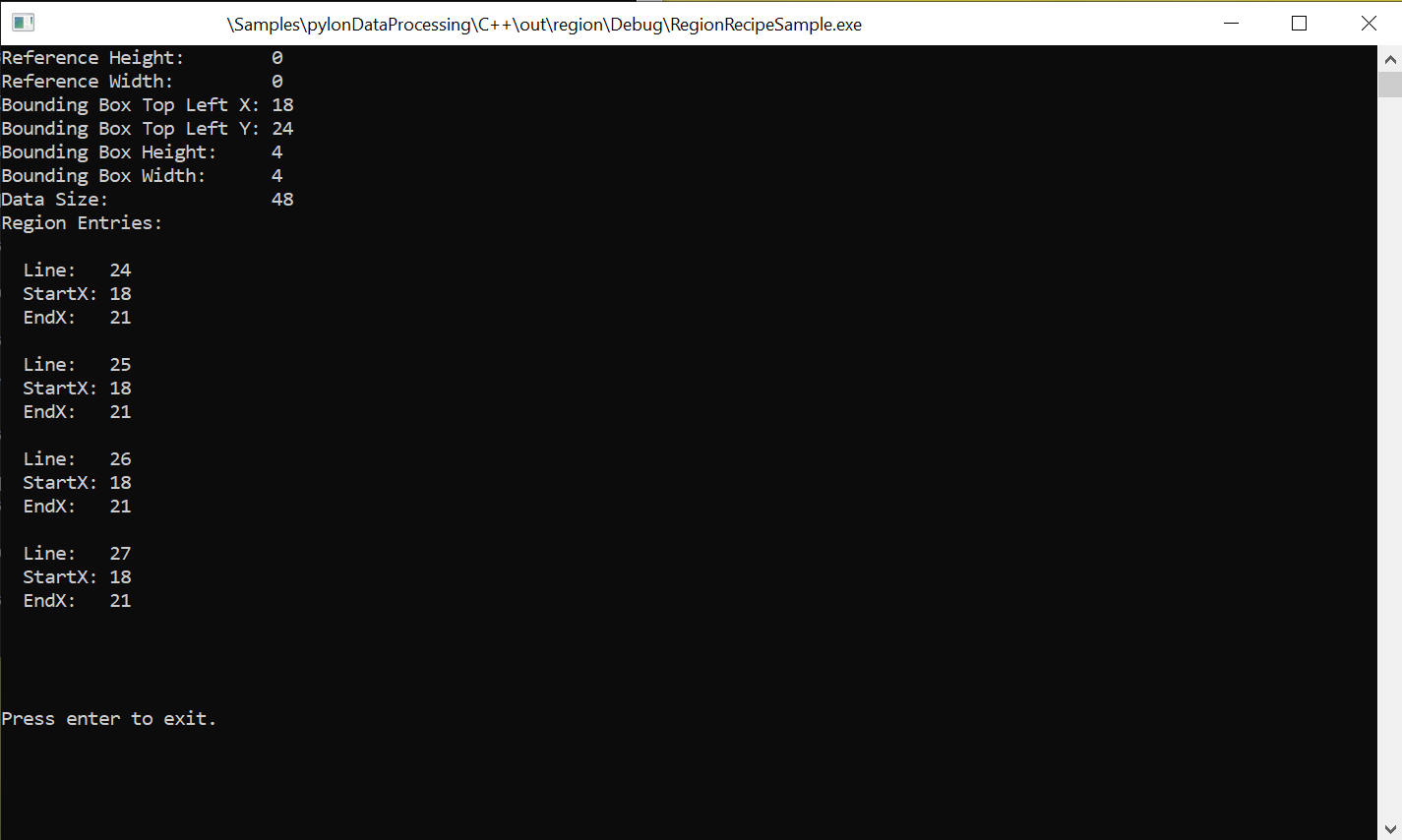

Region#

This sample demonstrates how to use the CRegion data type.

This sample uses a predefined region.precipe file and the Region Morphology vTool to demonstrate how to create CRegion objects and how to access their attributes.

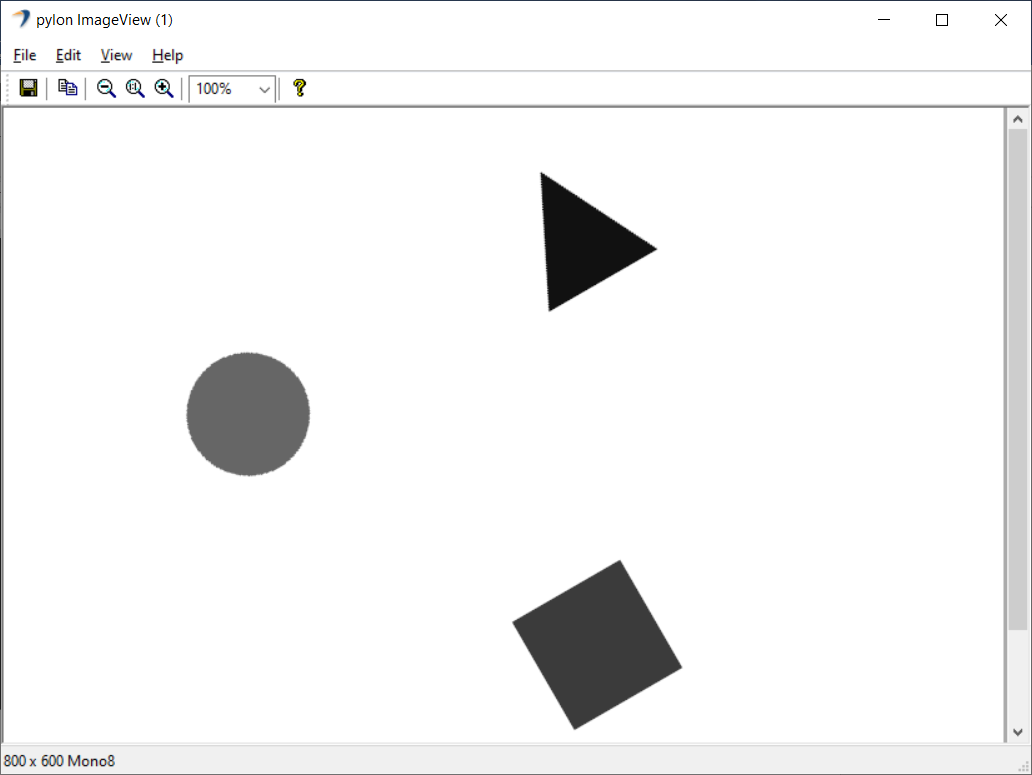

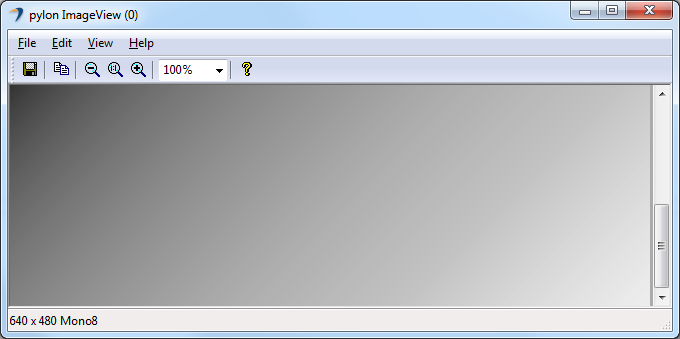

The following image shows the region created manually.

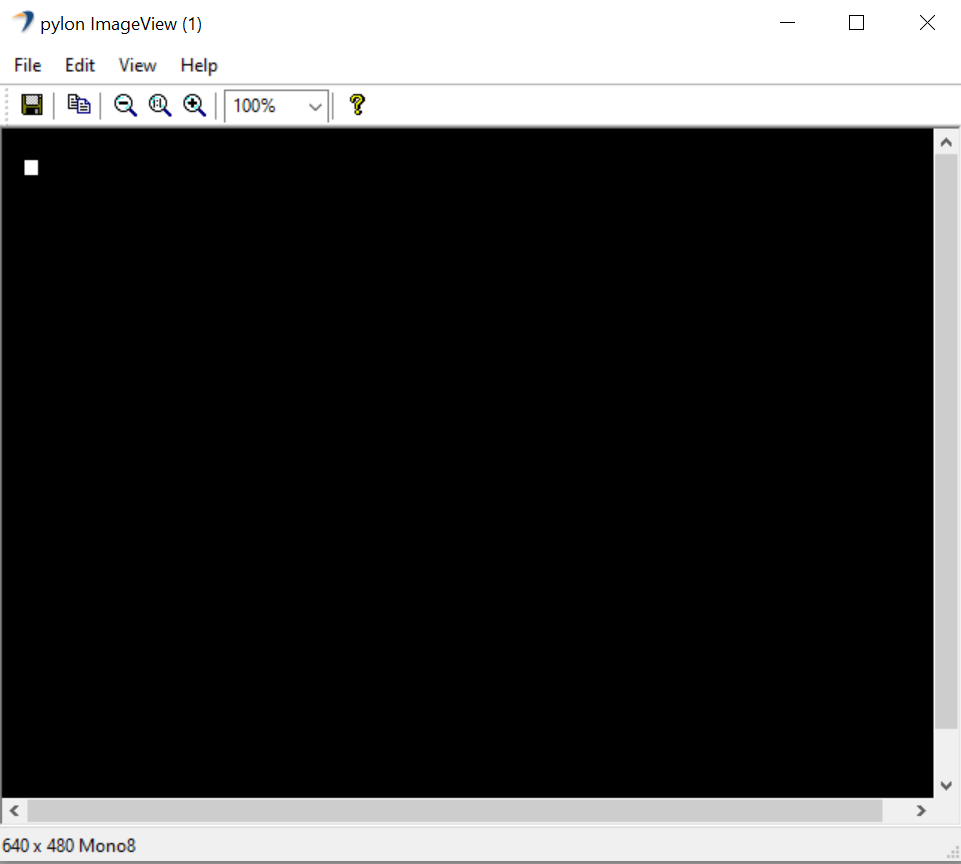

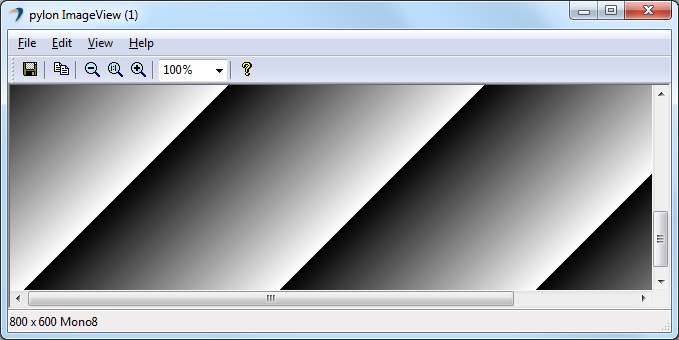

This image shows the region output by the Region Morphology vTool.

Code#

The CGenericOutputObserver class is used to receive the output data from the recipe.

The CRecipe class is used to create a recipe object representing a recipe file that is created using the pylon Viewer Workbench.

The recipe.Load() method is used to load a recipe file.

The recipe.RegisterAllOutputsObserver() method is used to register the CGenericOutputObserver object, which is used for collecting the output data.

The ComputeRegionSize() function is used to compute the required size in bytes to store ten run-length-encoded region entries.

A CRegion object is created with a data size large enough to store ten region entries, a reference size of 640 * 480 pixels, and a bounding box starting at the pixel position X=15, Y=21 with a height of 10 pixels and a width of 10 pixels.

To access the individual region entries, the region buffer is accessed using the region.GetBuffer() method.

Each run-length-encoded region entry is defined by a start X position, an end X position, and a Y position. These are set for all entries in a loop.
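The run-length encoding described above can be illustrated without the pylon API. In the following sketch, RunLengthEntry, RequiredBytes(), and MakeRectangleRegion() are hypothetical stand-ins for the region entry type and the helper functions used in the sample. The sketch also assumes that the end X position is inclusive, which should be checked against the pylon API documentation.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for one run-length-encoded region entry:
// a horizontal run of pixels from startX to endX in image row y.
struct RunLengthEntry
{
    std::int32_t startX;
    std::int32_t endX;
    std::int32_t y;
};

// Number of bytes needed to store 'entryCount' entries.
inline std::size_t RequiredBytes(std::size_t entryCount)
{
    return entryCount * sizeof(RunLengthEntry);
}

// Builds one entry per row for a filled rectangle.
// Assumption: endX is the last pixel that belongs to the run.
inline std::vector<RunLengthEntry> MakeRectangleRegion(std::int32_t left,
                                                       std::int32_t top,
                                                       std::int32_t width,
                                                       std::int32_t height)
{
    std::vector<RunLengthEntry> entries;
    for (std::int32_t row = 0; row < height; ++row)
    {
        entries.push_back({left, left + width - 1, top + row});
    }
    return entries;
}
```

For the bounding box used in the sample (X=15, Y=21, width 10, height 10), MakeRectangleRegion(15, 21, 10, 10) yields ten entries, one for each of the rows 21 to 30.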

The attributes of the region created are queried using the inputRegion.GetReferenceHeight(), inputRegion.GetReferenceWidth(), inputRegion.GetBoundingBoxTopLeftX(), inputRegion.GetBoundingBoxTopLeftY(), inputRegion.GetBoundingBoxHeight(), inputRegion.GetBoundingBoxWidth(), and inputRegion.GetDataSize() methods and then printed to the console.

The recipe.Start() method is called to start the processing.

The region created is pushed to the recipe's input pin called Regions using the recipe.TriggerUpdate() method.

The resultCollector.RetrieveResult() method is used to retrieve the processed results.

The attributes of all resulting regions and their region entries are printed to the console.

The recipe.Stop() method is called to stop the processing.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

C++ Samples#

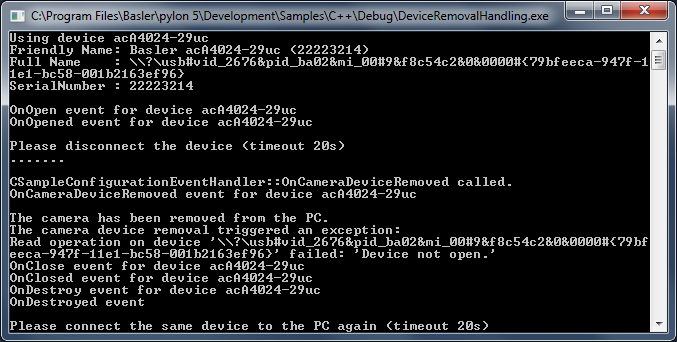

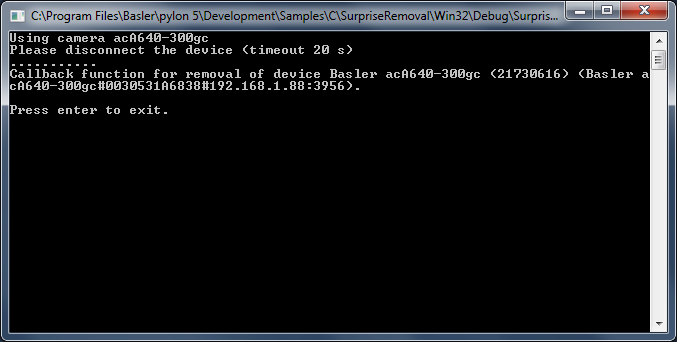

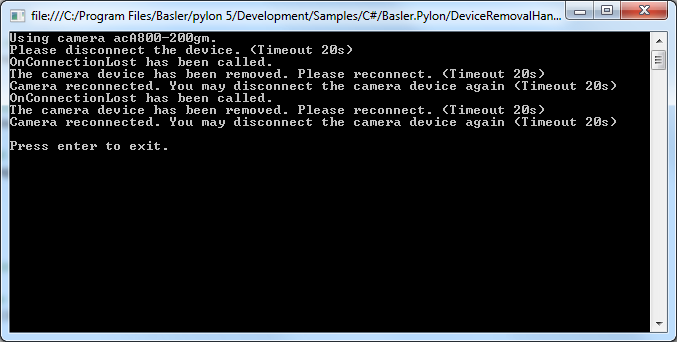

DeviceRemovalHandling#

This sample demonstrates how to detect the removal of a camera device. It also shows you how to reconnect to a removed device.

Info

If you build this sample in debug mode and run it using a GigE camera device, pylon will set the heartbeat timeout to 5 minutes. This is done to allow debugging and single-stepping without losing the camera connection due to missing heartbeats. However, with this setting, it would take 5 minutes for the application to notice that a GigE device has been disconnected. As a workaround, the heartbeat timeout is set to 1000 ms.

Code#

Info

You can find the sample code here.

The CTlFactory class is used to create a generic transport layer.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The CHeartbeatHelper class is used to set the HeartbeatTimeout to an appropriate value.

The CSampleConfigurationEventHandler is used to handle device removal events.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- Camera Link

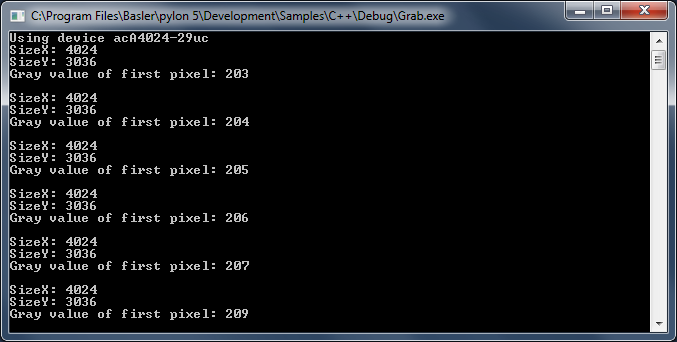

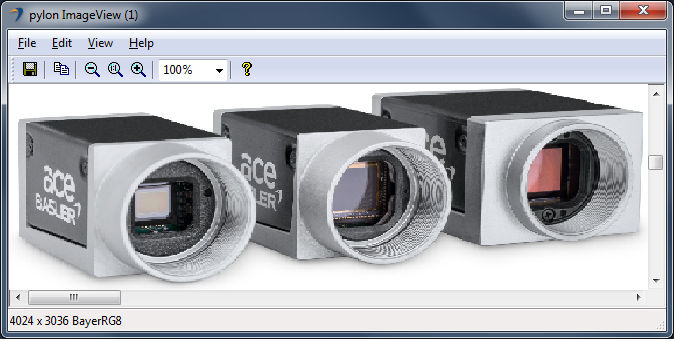

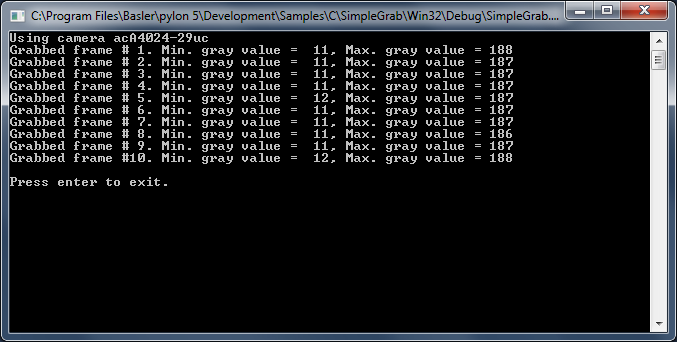

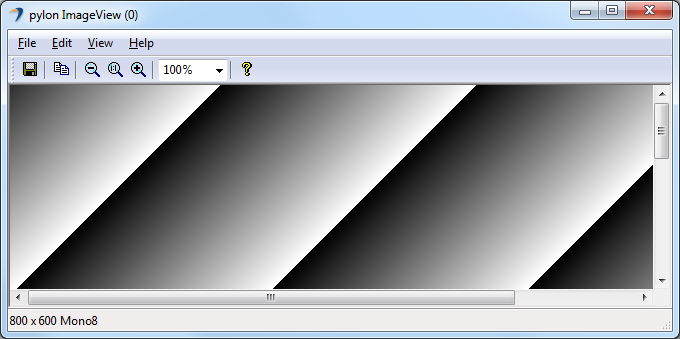

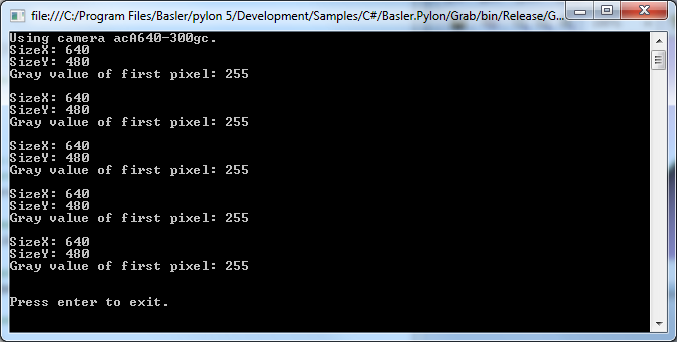

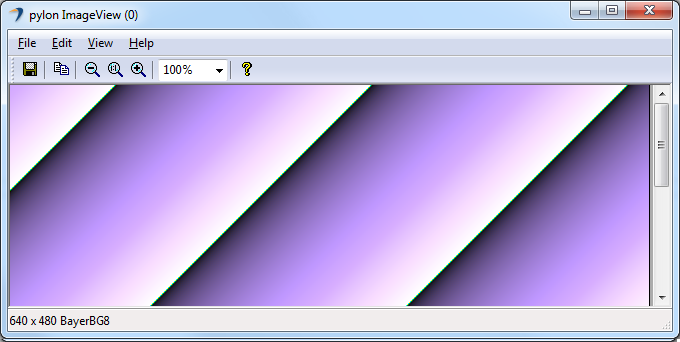

Grab#

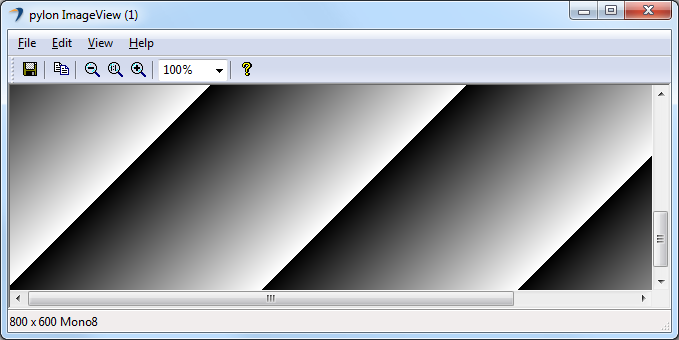

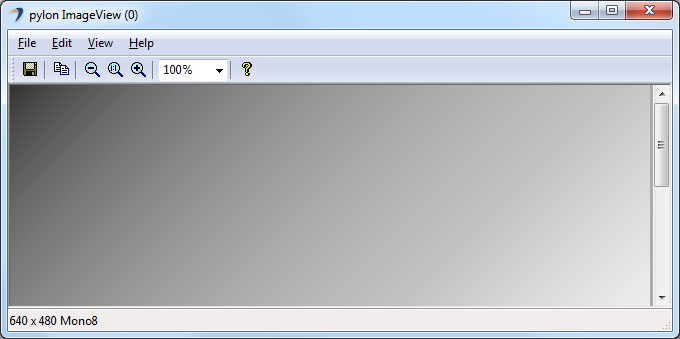

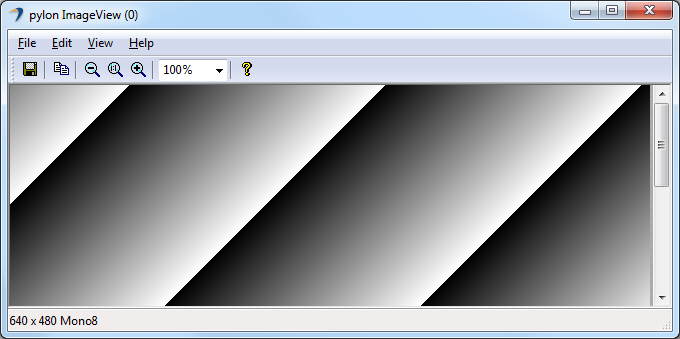

This sample demonstrates how to grab and process images using the CInstantCamera class.

The images are grabbed and processed asynchronously, i.e., at the same time that the application is processing a buffer, the acquisition of the next buffer takes place.

The CInstantCamera class uses a pool of buffers to retrieve image data from the camera device. Once a buffer is filled and ready, the buffer can be retrieved from the camera object for processing. The buffer and additional image data are collected in a grab result. The grab result is held by a smart pointer after retrieval. The buffer is automatically reused when explicitly released or when the smart pointer object is destroyed.

Code#

Info

You can find the sample code here.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.
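The reuse behavior described above can be modeled with standard C++ smart pointers. The following sketch is not the pylon implementation. BufferPool, Acquire(), and FreeCount() are hypothetical names, and the pool merely stands in for the camera object. It shows how a buffer can return to its pool when the last smart pointer referencing it is destroyed, and how the buffer can stay valid even if the pool is destroyed first.

```cpp
#include <cstddef>
#include <cstdint>
#include <memory>
#include <mutex>
#include <utility>
#include <vector>

using Buffer = std::vector<std::uint8_t>;

// Hypothetical buffer pool that stands in for the camera object.
class BufferPool : public std::enable_shared_from_this<BufferPool>
{
public:
    static std::shared_ptr<BufferPool> Create(std::size_t bufferCount, std::size_t bufferBytes)
    {
        std::shared_ptr<BufferPool> pool(new BufferPool());
        for (std::size_t i = 0; i < bufferCount; ++i)
        {
            pool->m_free.push_back(std::unique_ptr<Buffer>(new Buffer(bufferBytes)));
        }
        return pool;
    }

    // Hands out a free buffer, or an empty pointer if all buffers are in use.
    std::shared_ptr<Buffer> Acquire()
    {
        std::unique_ptr<Buffer> buffer;
        {
            std::lock_guard<std::mutex> lock(m_mutex);
            if (m_free.empty())
            {
                return std::shared_ptr<Buffer>();
            }
            buffer = std::move(m_free.back());
            m_free.pop_back();
        }
        std::weak_ptr<BufferPool> weakPool = shared_from_this();
        // The custom deleter runs when the last smart pointer is destroyed.
        return std::shared_ptr<Buffer>(buffer.release(), [weakPool](Buffer* raw) {
            std::unique_ptr<Buffer> owned(raw);
            if (std::shared_ptr<BufferPool> pool = weakPool.lock())
            {
                pool->Release(std::move(owned)); // pool still exists: reuse the buffer
            }
            // Otherwise the pool is gone and 'owned' frees the buffer here.
        });
    }

    std::size_t FreeCount() const
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        return m_free.size();
    }

private:
    BufferPool() = default;

    void Release(std::unique_ptr<Buffer> buffer)
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        m_free.push_back(std::move(buffer));
    }

    mutable std::mutex m_mutex;
    std::vector<std::unique_ptr<Buffer>> m_free;
};
```

The weak pointer in the deleter is the design choice that decouples the lifetimes: a buffer never keeps the pool alive, and a destroyed pool never invalidates a buffer that is still referenced.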

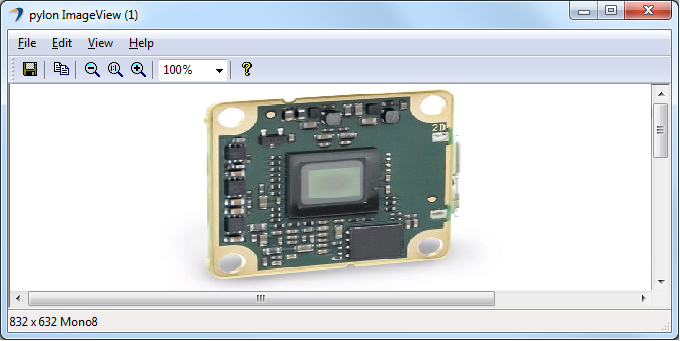

The DisplayImage class is used to display the grabbed images.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

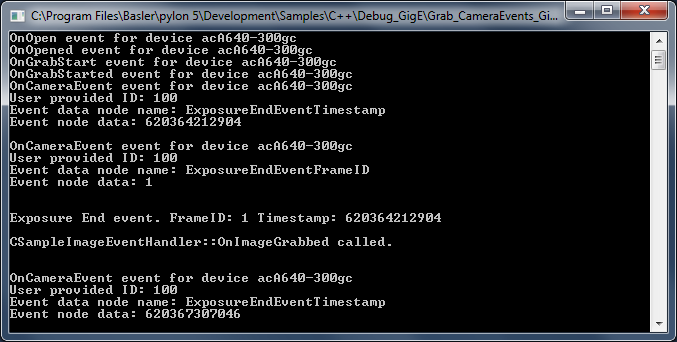

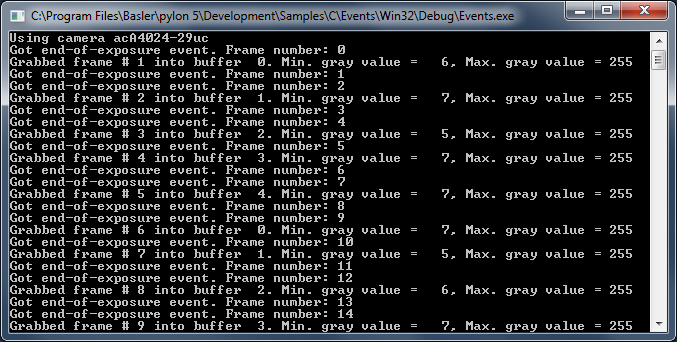

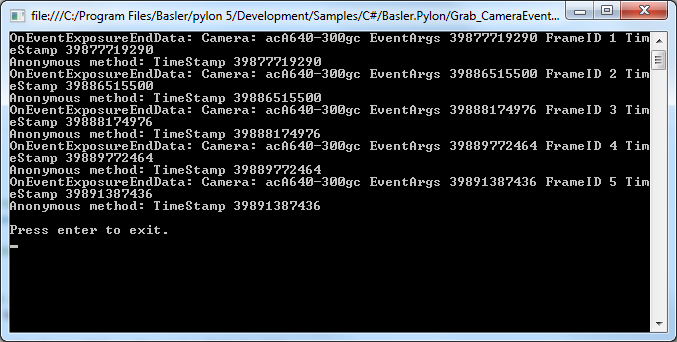

Grab_CameraEvents#

Basler USB3 Vision and GigE Vision cameras can send event messages. For example, when a sensor exposure has finished, the camera can send an Exposure End event to the computer. The event can be received by the computer before the image data of the finished exposure has been transferred completely. This sample demonstrates how to be notified when camera event message data is received.

The event messages are automatically retrieved and processed by the InstantCamera classes. The information carried by event messages is exposed as parameter nodes in the camera node map and can be accessed like standard camera parameters. These nodes are updated when a camera event is received. You can register camera event handler objects that are triggered when event data has been received.

These mechanisms are demonstrated for the Exposure End and the Event Overrun events.

The Exposure End event carries the following information:

- ExposureEndEventFrameID: Number of the image that has been exposed.

- ExposureEndEventTimestamp: Time when the event was generated.

- ExposureEndEventStreamChannelIndex: Number of the image data stream used to transfer the image. On Basler cameras, this parameter is always set to 0.

The Event Overrun event is sent by the camera as a warning that events are being dropped. The notification contains no specific information about how many or which events have been dropped.

Events may be dropped if events are generated at a high frequency and if there isn't enough bandwidth available to send the events.

This sample also shows you how to register event handlers that indicate the arrival of events sent by the camera. For demonstration purposes, different handlers are registered for the same event.

Info

Different camera families implement different versions of the Standard Feature Naming Convention (SFNC). That's why the name and the type of the parameters used can be different.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera device found independent of its interface.

The CSoftwareTriggerConfiguration class is used to register the standard configuration event handler for enabling software triggering. The software trigger configuration handler replaces the default configuration handler.

The CSampleCameraEventHandler class demonstrates the use of example handlers for camera events.

The CSampleImageEventHandler class demonstrates the use of an image event handler.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

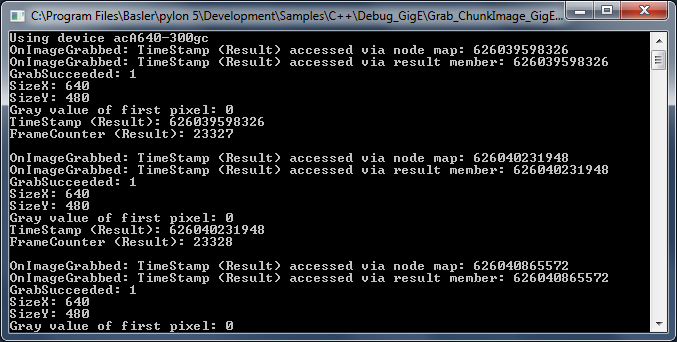

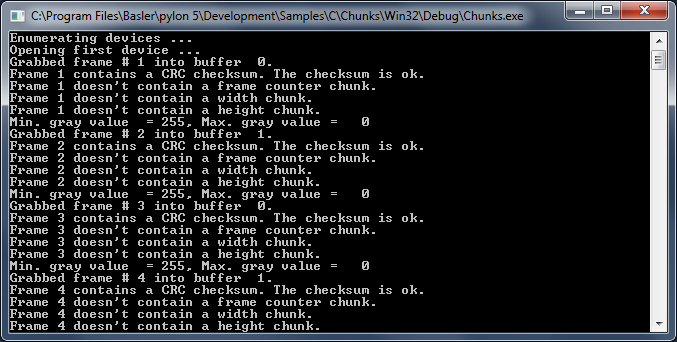

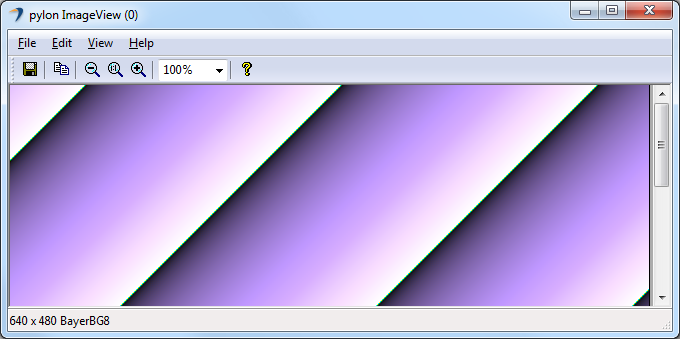

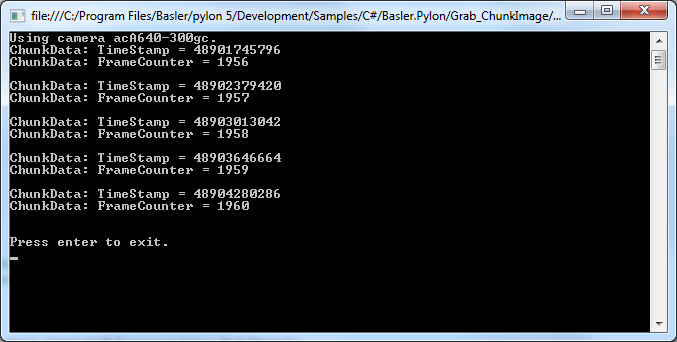

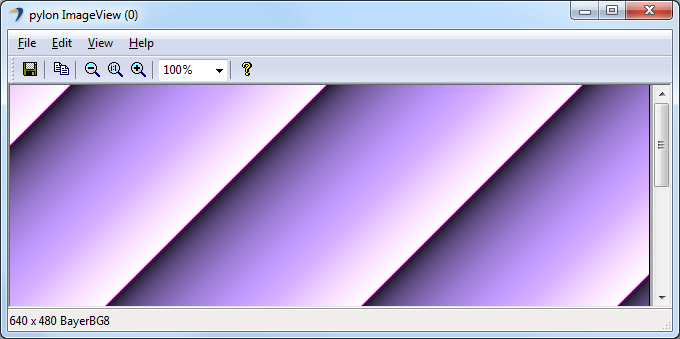

Grab_ChunkImage#

Basler cameras supporting the Data Chunk feature can generate supplementary image data, e.g., frame count, time stamp, or CRC checksums, and append it to each acquired image.

This sample demonstrates how to enable the Data Chunks feature, how to grab images, and how to process the appended data. When the camera is in chunk mode, it transfers data blocks that are partitioned into chunks. The first chunk is always the image data. The data chunks that you have chosen follow the image data chunk.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera device found independent of its interface.

The CBaslerUniversalGrabResultPtr class is used to initialize a smart pointer that will receive the grab result and chunk data independent of the camera interface.

The CSampleImageEventHandler class demonstrates the use of an image event handler.

The DisplayImage class is used to display the grabbed images.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

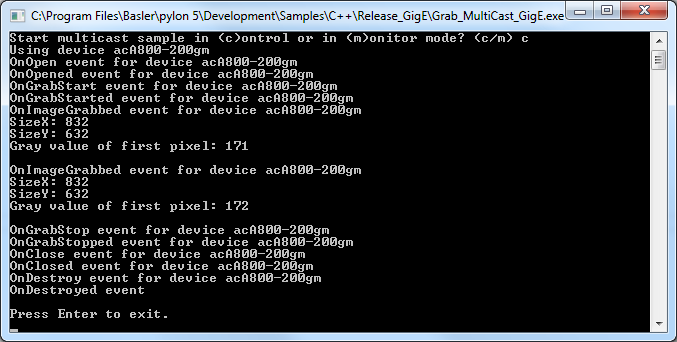

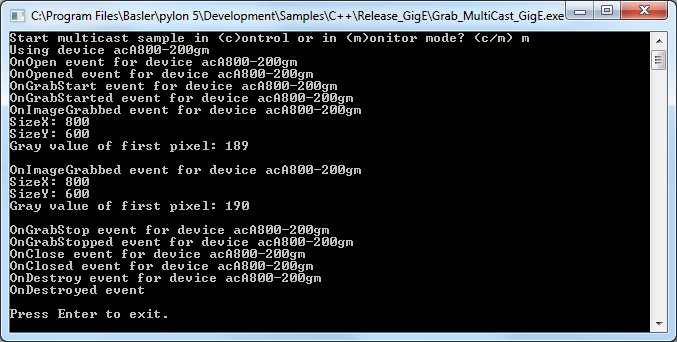

Grab_MultiCast#

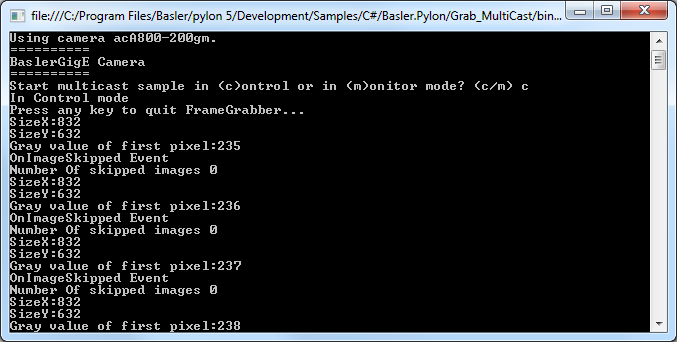

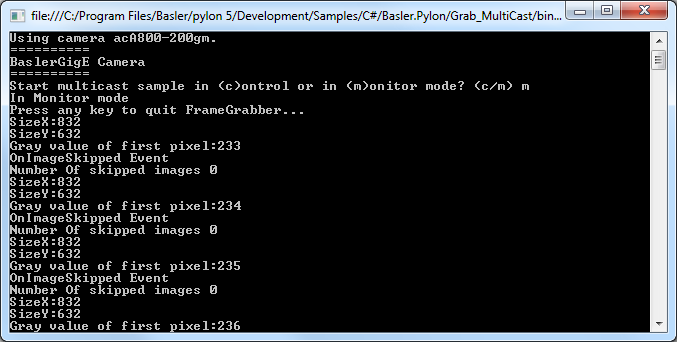

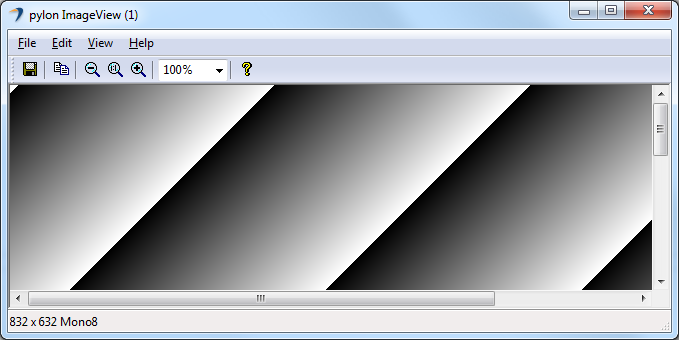

This sample applies to Basler GigE Vision cameras only and demonstrates how to open a camera in multicast mode and how to receive a multicast stream.

Two instances of an application must be run simultaneously on different computers. The first application started on computer A acts as the controlling application and has full access to the GigE camera. The second instance started on computer B opens the camera in monitor mode. This instance is not able to control the camera but can receive multicast streams.

To run the sample, start the application on computer A in control mode. After computer A has begun to receive frames, start the second instance of this application on computer B in monitor mode.

Code#

Info

You can find the sample code here.

The CDeviceInfo class is used to look for cameras with a specific interface, i.e., GigE Vision only (BaslerGigEDeviceClass).

The CBaslerUniversalInstantCamera class is used to find and create a camera object for the first GigE camera found.

When the camera is opened in control mode, the transmission type must be set to "multicast". In this case, the IP address and the IP port must also be set. This is done by the following command:

camera.GetStreamGrabberParams().TransmissionType = TransmissionType_Multicast;

When the camera is opened in monitor mode, i.e., the camera is already controlled by another application and configured for multicast, the active camera configuration can be used. In this case, the IP address and IP port will be set automatically:

camera.GetStreamGrabberParams().TransmissionType = TransmissionType_UseCameraConfig;

RegisterConfiguration() is used to remove the default camera configuration. This is necessary when a monitor mode is selected because the monitoring application is not allowed to modify any camera parameter settings.

The CConfigurationEventPrinter and CImageEventPrinter classes are used for information purposes to print details about events being called and image grabbing.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

Applicable Interfaces#

- GigE Vision

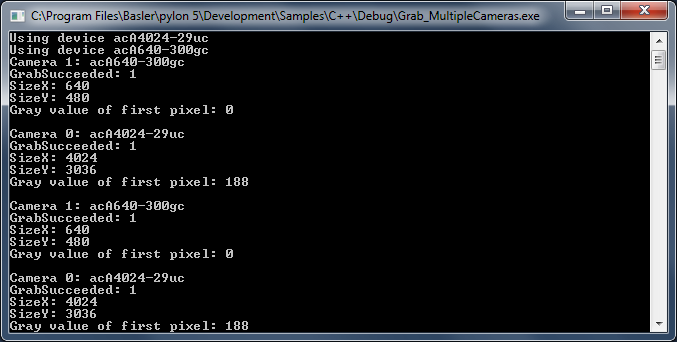

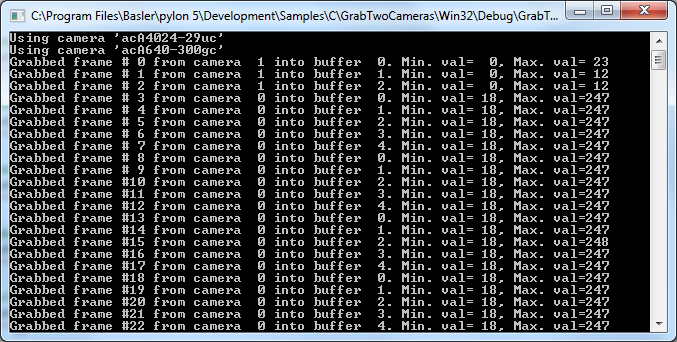

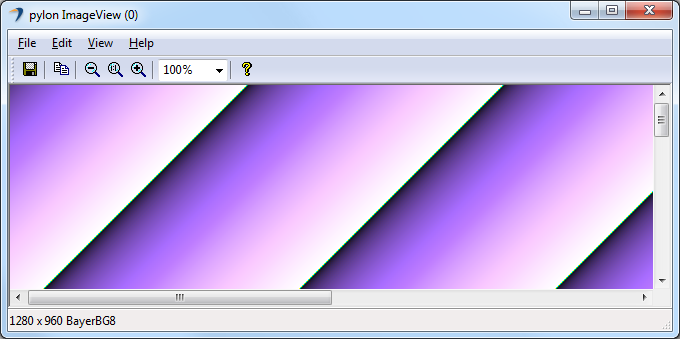

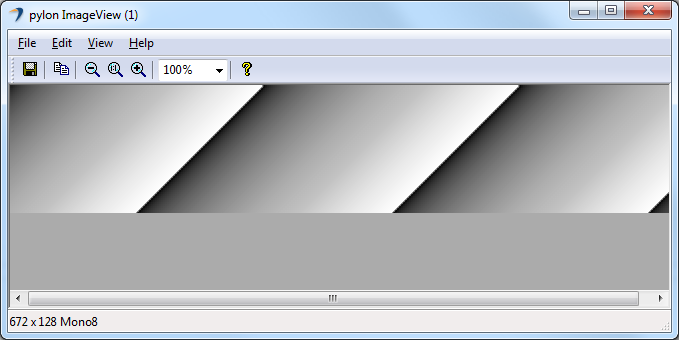

Grab_MultipleCameras#

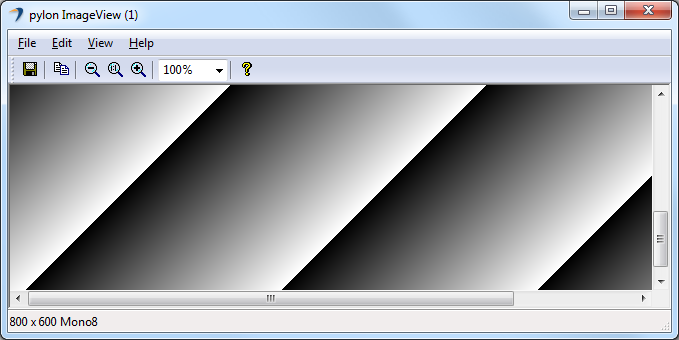

This sample demonstrates how to grab and process images from multiple cameras using the CInstantCameraArray class. The CInstantCameraArray class represents an array of Instant Camera objects. It provides almost the same interface as the Instant Camera for grabbing.

The main purpose of CInstantCameraArray is to simplify waiting for images and camera events of multiple cameras in one thread. This is done by providing a single RetrieveResult method for all cameras in the array.

Alternatively, the grabbing can be started using the internal grab loop threads of all cameras in the CInstantCameraArray. The grabbed images can then be processed by one or more image event handlers. Note that this is not shown in this sample.

Code#

Info

You can find the sample code here.

The CInstantCameraArray class demonstrates how to create an array of Instant Cameras for the devices found.

StartGrabbing() starts grabbing sequentially for all cameras, starting with index 0, 1, etc.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

The DisplayImage class is used to show the image acquired by each camera in a separate window for each camera.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

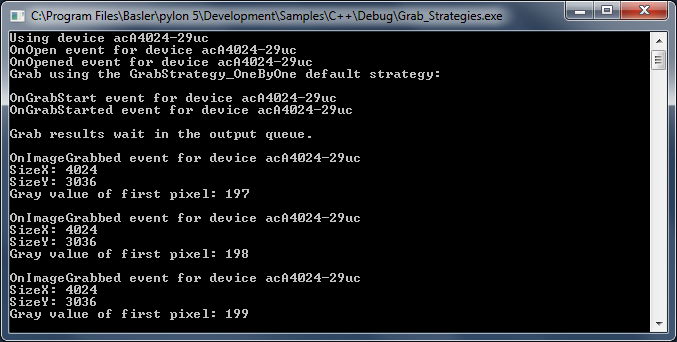

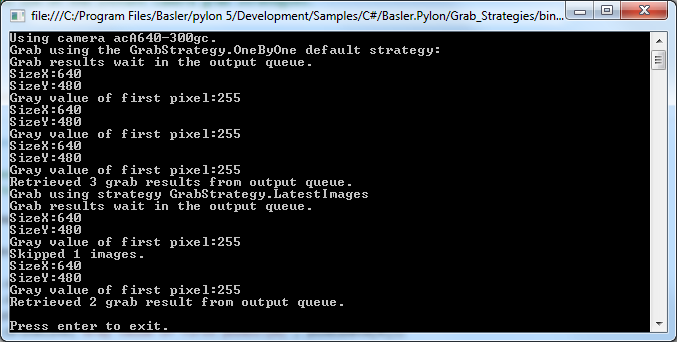

Grab_Strategies#

This sample demonstrates the use of the following CInstantCamera grab strategies:

- GrabStrategy_OneByOne

- GrabStrategy_LatestImageOnly

- GrabStrategy_LatestImages

- GrabStrategy_UpcomingImage

When the "OneByOne" grab strategy is used, images are processed in the order of their acquisition. This strategy can be useful when all grabbed images need to be processed, e.g., in production and quality inspection applications.

The "LatestImageOnly" and "LatestImages" strategies can be useful when the acquired images are only displayed on screen. If the processor has been busy for a while and images could not be displayed automatically, the latest image is displayed when processing time is available again.

The "UpcomingImage" grab strategy can be used to make sure to get an image that has been grabbed after RetrieveResult() has been called. This strategy cannot be used with USB3 Vision cameras.
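The difference between the queue-based strategies can be modeled with a small, self-contained sketch. It is a simplified model and not the pylon implementation: OutputQueueModel, OnImageGrabbed(), and Retrieve() are hypothetical names, frames are represented by their numbers only, and the queue limit for the "LatestImages" case is passed as a hypothetical constructor argument. The "UpcomingImage" strategy isn't modeled because it depends on when RetrieveResult() is called.

```cpp
#include <cstddef>
#include <deque>

enum class Strategy
{
    OneByOne,
    LatestImageOnly,
    LatestImages
};

// Hypothetical model of the queue between image acquisition and the application.
class OutputQueueModel
{
public:
    explicit OutputQueueModel(Strategy strategy, std::size_t maxLatestImages = 3)
        : m_strategy(strategy), m_maxLatestImages(maxLatestImages)
    {
    }

    // Called whenever a new frame has been grabbed.
    void OnImageGrabbed(int frameNumber)
    {
        m_frames.push_back(frameNumber);
        if (m_strategy == Strategy::OneByOne)
        {
            return; // every frame is kept in the order of acquisition
        }
        const std::size_t limit =
            (m_strategy == Strategy::LatestImageOnly) ? 1 : m_maxLatestImages;
        while (m_frames.size() > limit)
        {
            m_frames.pop_front(); // older frames are skipped
        }
    }

    // Returns the next frame for processing, or false if none is waiting.
    bool Retrieve(int& frameNumber)
    {
        if (m_frames.empty())
        {
            return false;
        }
        frameNumber = m_frames.front();
        m_frames.pop_front();
        return true;
    }

private:
    Strategy m_strategy;
    std::size_t m_maxLatestImages;
    std::deque<int> m_frames;
};
```

If five frames arrive while the application is busy, this model returns frame 1 first for "OneByOne", only frame 5 for "LatestImageOnly", and frames 4 and 5 for "LatestImages" with a limit of two.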

Code#

Info

You can find the sample code here.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

The CSoftwareTriggerConfiguration class is used to register the standard configuration event handler for enabling software triggering. The software trigger configuration handler replaces the default configuration. StartGrabbing() is used to demonstrate the usage of the different grab strategies.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

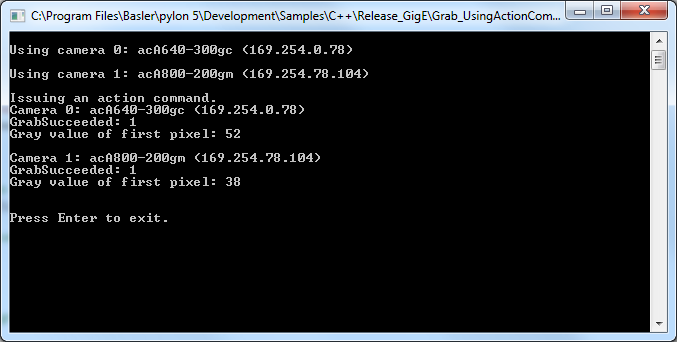

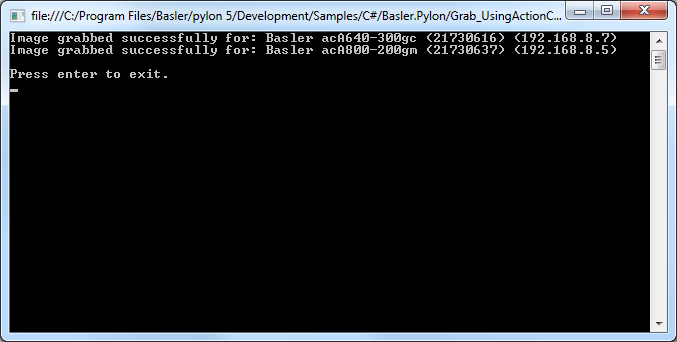

Grab_UsingActionCommand#

This sample applies to Basler GigE Vision cameras only and demonstrates how to issue a GigE Vision ACTION_CMD to multiple cameras.

By using an action command, multiple cameras can be triggered at the same time, as opposed to software triggering, where each camera must be triggered individually.

Code#

Info

You can find the sample code here.

To make the configuration of multiple cameras easier, this sample uses the CBaslerUniversalInstantCameraArray class.

The IGigETransportLayer interface is used to issue action commands.

The CActionTriggerConfiguration class is used to set up the basic action command features.

The CBaslerUniversalGrabResultPtr class is used to declare and initialize a smart pointer to receive the grab result data. When the cameras in the array are created, the index number of each camera in the array is assigned as its camera context value. The camera context is a user-settable value that is attached to each grab result. It can be used to determine the camera that produced the grab result by calling ptrGrabResult->GetCameraContext().

The DisplayImage class is used to display the grabbed images.

Applicable Interfaces#

- GigE Vision

Grab_UsingAsyncGatedTriggerMultiframe#

The sample demonstrates how to use Overflow events and asynchronous gated triggers (Multiframe) in line scan applications where you want to capture images that are composed of a sequence of multiple buffers. This sample applies when using software triggering.

Typically, this is useful in applications where a gate is used to mark the end of an image, e.g., a light barrier is used to determine whether there is an object on a conveyor belt or whether the conveyor belt is empty. This results in the camera being triggered and the lines being appended on the frame grabber.

Using the image ROI parameters, you can specify the buffer size. For example, you can set the width and the height to 8192.

In this example, the signal at the gate is high for 3 lines. So, three lines should be combined on the frame grabber to form one image. Because the buffer is larger than this (8192 x 8192), you must use GetPayloadSize() to determine the actual height of the image in order to reconstruct it correctly.

The following animation shows this example.

If the signal at the gate were high for 16384 lines, however, this would cause RAM usage and latency to increase so much that inspection tasks become virtually impossible.

For cases like this, you can define a smaller ROI, e.g., 8192 x 1024. This would result in several images that all belong to one sequence. For example, if the signal at the gate is high for 5000 lines, you will get 5 images, where the first four have a height of 1024 and the last one a height of 904.

Again, it is necessary to calculate the actual height by using the GetPayloadSize() method. Furthermore, to know whether the images belong to the same sequence, the isEndOfSequence event must be read out.
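The arithmetic behind these examples can be written down as a short sketch. The functions SplitIntoBuffers() and ActualHeight() are hypothetical helpers and not part of the pylon API. ActualHeight() assumes that the payload consists of tightly packed lines without padding, so the pixel format and any line padding of your setup have to be taken into account.

```cpp
#include <cstddef>
#include <vector>

// Splits a gate signal that is high for 'totalLines' lines into buffers
// of at most 'roiHeight' lines and returns the height of each buffer.
inline std::vector<std::size_t> SplitIntoBuffers(std::size_t totalLines, std::size_t roiHeight)
{
    std::vector<std::size_t> heights;
    if (roiHeight == 0)
    {
        return heights;
    }
    while (totalLines > 0)
    {
        const std::size_t height = (totalLines < roiHeight) ? totalLines : roiHeight;
        heights.push_back(height);
        totalLines -= height;
    }
    return heights;
}

// Derives the number of valid lines in a buffer from its payload size.
// Assumption: lines are tightly packed, i.e., width * bytesPerPixel bytes each.
inline std::size_t ActualHeight(std::size_t payloadBytes, std::size_t width, std::size_t bytesPerPixel)
{
    const std::size_t bytesPerLine = width * bytesPerPixel;
    return (bytesPerLine == 0) ? 0 : payloadBytes / bytesPerLine;
}
```

With a gate that is high for 5000 lines and an ROI height of 1024, SplitIntoBuffers(5000, 1024) returns four buffers of 1024 lines followed by one buffer of 904 lines, which matches the example above.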

The following animation shows a simplified version of this scenario with the height set to 2.

For this event, it is necessary to register a callback event. Because this event is in the frame grabber, it is also necessary to call the unregister function manually to make it thread-safe.

Configuring the Camera#

- Create and open a camera.

- Set the TestPattern enumeration node to pattern6.

- Set AutomaticROIControl to Off.

- Set the EventSelector transport layer node to the Overflow event.

- Set the EventNotification transport layer node to On to enable event notifications for overflows on the frame grabber.

- Set the OverflowEventSelect transport layer node to All to get notifications for all events.

- Set the ImageTriggerMode transport layer node to AsyncGatedTriggerMultiframe.

- Set the ImageTriggerInputSource transport layer node to SoftwareTrigger.

- Set the SetSoftwareTrigger transport layer node to 0.

- Start grabbing on the camera.

Event Handling Callback#

- If grabbing is not terminated, get the pNode parameter's children.

- If the node name is EventOverflowFrameId, store the frame ID.

- If the node name is EventOverflowIsEndOfSequence, store the sequence end.
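The callback logic listed above can be sketched with simplified stand-in types. In the sample, the callback receives a node of the transport layer node map. Here, the hypothetical EventChild struct replaces the child nodes, and OverflowState and OnOverflowEvent() are hypothetical names as well. The sketch only shows the decision logic and leaves out the synchronization that is needed when the callback runs on a different thread than the grab loop.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for one child node of the event data node.
struct EventChild
{
    std::string name;
    std::int64_t value;
};

// Hypothetical state shared between the callback and the grab loop.
struct OverflowState
{
    bool grabbingTerminated = false;
    std::int64_t frameId = -1;
    bool isEndOfSequence = false;
};

// Mirrors the steps above: ignore events after grabbing has terminated,
// otherwise store the frame ID and the end-of-sequence flag.
inline void OnOverflowEvent(const std::vector<EventChild>& children, OverflowState& state)
{
    if (state.grabbingTerminated)
    {
        return;
    }
    for (const EventChild& child : children)
    {
        if (child.name == "EventOverflowFrameId")
        {
            state.frameId = child.value;
        }
        else if (child.name == "EventOverflowIsEndOfSequence")
        {
            state.isEndOfSequence = (child.value != 0);
        }
    }
}
```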

Key Transport Layer Parameters#

| Node | Purpose |

|---|---|

| EventSelector | Sets the event notification to be enabled. |

| EventNotification | Enables event notifications for the event currently selected by the EventSelector node. |

| OverflowEventSelect | Sets which overflow events result in notifications. |

| ImageTriggerMode | Sets the image trigger mode, e.g., AsyncGatedTriggerMultiframe, AsyncGatedTrigger, AsyncExternalTriggerMultiframe. |

Applicable Interfaces#

- CXP

Grab_UsingAsyncGatedTriggerMultiframe_Hardware#

The sample demonstrates how to use Overflow events and asynchronous gated triggers (Multiframe) in line scan applications where you want to capture images that are composed of a sequence of multiple buffers. This sample applies when using hardware triggering.

Typically, this is useful in applications where a gate is used to mark the end of an image, e.g., a light barrier is used to determine whether there is an object on a conveyor belt or whether the conveyor belt is empty. This results in the camera being triggered and the lines being appended on the frame grabber.

Using the image ROI parameters, you can specify the buffer size. For example, you can set the width and the height to 8192.

In this example, the signal at the gate is high for 3 lines. So, three lines should be combined on the frame grabber to form one image. Because the buffer is larger than this (8192 x 8192), you must use GetPayloadSize() to determine the actual height of the image in order to reconstruct it correctly.
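The height reconstruction described above can be sketched as follows. This is a minimal illustration, assuming an 8-bit monochrome pixel format (one byte per pixel) and lines stored without padding. The ActualImageHeight name and its parameters are hypothetical and not part of the sample; in the sample, the payload size would come from GetPayloadSize().

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not part of pylon): derives the number of valid
// lines in a buffer from the payload size reported for a grab result.
// Assumption: each line occupies width * bytesPerPixel bytes, no padding.
std::size_t ActualImageHeight(std::size_t payloadSizeBytes,
                              std::size_t width,
                              std::size_t bytesPerPixel = 1)
{
    const std::size_t bytesPerLine = width * bytesPerPixel;
    assert(bytesPerLine > 0);                      // width must be non-zero
    assert(payloadSizeBytes % bytesPerLine == 0);  // only whole lines expected
    return payloadSizeBytes / bytesPerLine;
}
```

With a width of 8192 and a payload of 3 x 8192 bytes, the helper yields a height of 3, even though the buffer was allocated for 8192 lines.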

The following animation shows this example.

If the signal at the gate were high for 16384 lines, however, this would cause RAM usage and latency to increase so much that inspection tasks become virtually impossible.

For cases like this, you can define a smaller ROI, e.g., 8192 x 1024. This would result in several images that all belong to one sequence. For example, if the signal at the gate is high for 5000 lines, you will get 5 images, where the first four have a height of 1024 and the last one a height of 904.
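The arithmetic behind this split can be checked with a short sketch. The SplitIntoBufferHeights name is hypothetical and only models the line counts; it does not touch the frame grabber.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: heights of the buffers that result when a gate
// signal that is high for gateLines lines is captured with a ROI height
// of roiHeight lines.
std::vector<std::size_t> SplitIntoBufferHeights(std::size_t gateLines,
                                                std::size_t roiHeight)
{
    assert(roiHeight > 0);
    std::vector<std::size_t> heights;
    std::size_t remaining = gateLines;
    while (remaining > 0)
    {
        // Every buffer is full except possibly the last one.
        const std::size_t height = std::min(remaining, roiHeight);
        heights.push_back(height);
        remaining -= height;
    }
    return heights;
}
```

For 5000 lines and a ROI height of 1024, this returns four entries of 1024 followed by one entry of 904, matching the example above.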

Again, it is necessary to calculate the actual height by using the GetPayloadSize() method. Furthermore, to know whether the images belong to the same sequence, the isEndOfSequence event must be read out.

The following animation shows a simplified version of this scenario with the height set to 2.

For this event, it is necessary to register a callback. Because the event originates in the frame grabber, it is also necessary to deregister the callback manually to keep the shutdown thread-safe.

Configuring the Camera#

- Create and open a camera.

- Set the TestPattern enumeration node to pattern6.

- Set AutomaticROIControl to Off.

- Set the EventSelector transport layer node to the Overflow event.

- Set the EventNotification transport layer node to On to enable event notifications for overflows on the frame grabber.

- Set the OverflowEventSelect transport layer node to All to get notifications for all events.

- Register the event notification callback at the EventOverflowData transport layer node.

- Set the ImageTriggerMode transport layer node to AsyncGatedTriggerMultiframe.

- Set the LineSelector node to line2 and LineMode to Input on the camera.

- Set the TriggerSelector node to LineStart and TriggerMode to On on the camera.

- Set the ImageTriggerInputSource transport layer node to TriggerInSourceFrontGPI3.

- Start grabbing on the camera.

Event Handling Callback#

- If grabbing is not terminated, get the pNode parameter's children.

- If the node name is EventOverflowFrameId, store the frame ID.

- If the node name is EventOverflowIsEndOfSequence, store the sequence end.

Key Transport Layer Parameters#

| Node | Purpose |
|---|---|
| EventSelector | Sets the event notification to be enabled. |
| EventNotification | Enables event notifications for the event currently selected by the EventSelector node. |
| OverflowEventSelect | Sets which overflow events result in notifications. |
| ImageTriggerMode | Sets the image trigger mode, e.g., AsyncGatedTriggerMultiframe, AsyncGatedTrigger, AsyncExternalTriggerMultiframe. |

Applicable Interfaces#

- CXP

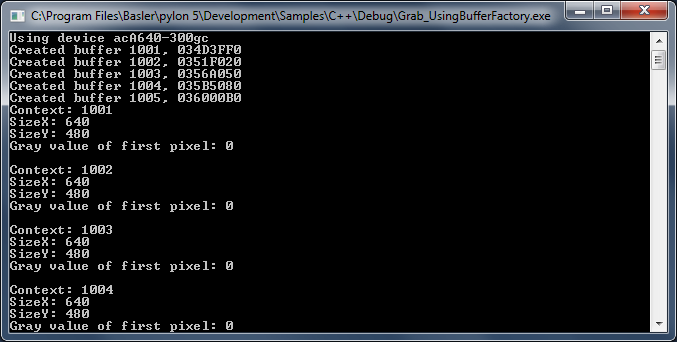

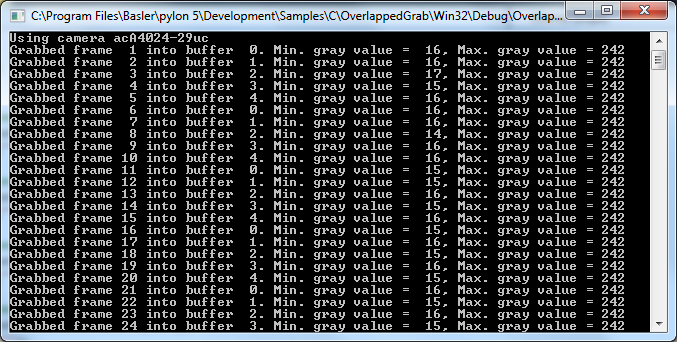

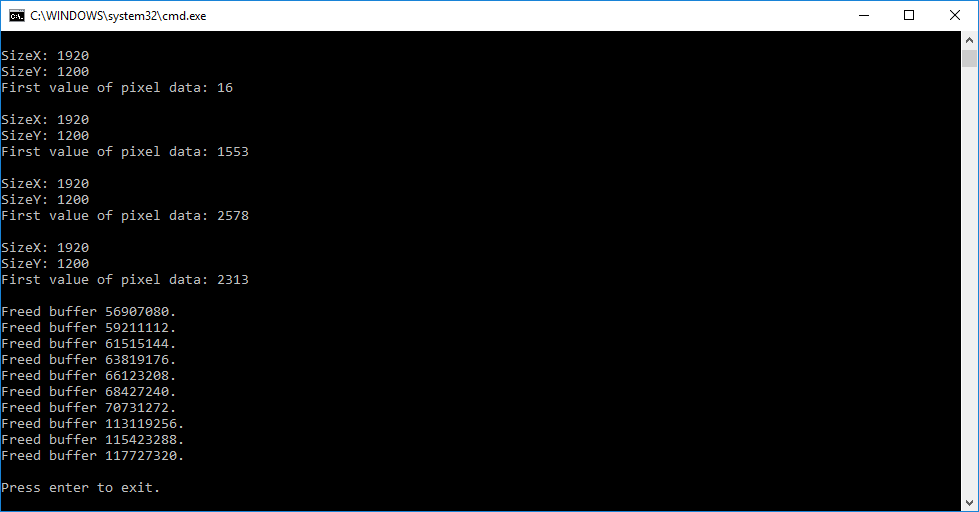

Grab_UsingBufferFactory#

This sample demonstrates the use of a user-provided buffer factory.

The use of a buffer factory is optional and intended for advanced use cases only. A buffer factory is only required if you plan to grab into externally supplied buffers.

Code#

Info

You can find the sample code here.

The MyBufferFactory class demonstrates the use of a user-provided buffer factory.

The buffer factory must be created first because objects on the stack are destroyed in reverse order of creation. The buffer factory must exist longer than the Instant Camera object in this sample.
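The lifetime rule can be illustrated without pylon. In this sketch, the Tracer type is a hypothetical stand-in for both the buffer factory and the Instant Camera object; it only records when each object is created and destroyed.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in object that logs its construction and destruction.
struct Tracer
{
    Tracer(std::string n, std::vector<std::string>& l)
        : name(std::move(n)), log(l)
    {
        log.push_back("create " + name);
    }
    ~Tracer() { log.push_back("destroy " + name); }

    std::string name;
    std::vector<std::string>& log;
};

// Creates a "factory" before a "camera" on the stack and returns the
// recorded order of events once both have gone out of scope.
std::vector<std::string> DemonstrateLifetimeOrder()
{
    std::vector<std::string> log;
    {
        Tracer factory("factory", log);  // created first ...
        Tracer camera("camera", log);    // ... so it is destroyed last
    }
    return log;
}
```

The recorded order is create factory, create camera, destroy camera, destroy factory, so the object created first outlives the one created after it.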

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

SetBufferFactory() is used to provide your own implementation of a buffer factory to the Instant Camera object. Since we control the lifetime of the factory object, we pass the Cleanup_None argument.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

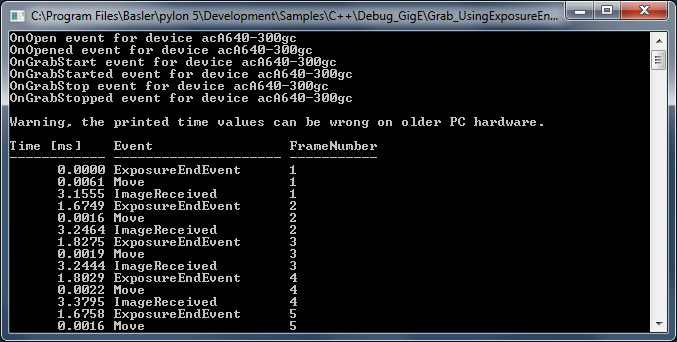

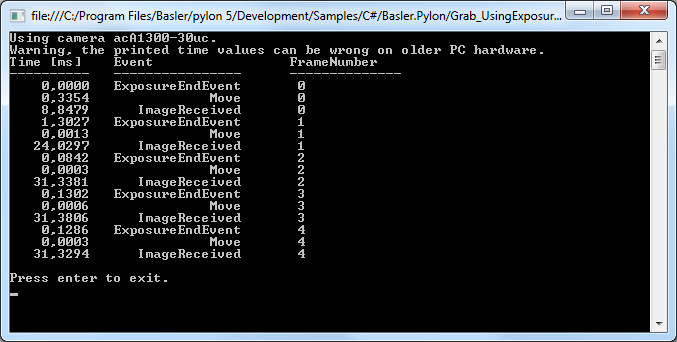

Grab_UsingExposureEndEvent#

This sample demonstrates how to use the Exposure End event to speed up image acquisition.

For example, when a sensor exposure is finished, the camera can send an Exposure End event to the computer.

The computer can receive the event before the image data of the finished exposure has been transferred completely.

This can be used in order to avoid an unnecessary delay, e.g., when an imaged object is moved before the related image data transfer is complete.

Code#

Info

You can find the sample code here.

The MyEvents enumeration is used for distinguishing between different events, e.g., ExposureEndEvent, FrameStartOvertrigger, EventOverrunEvent, ImageReceivedEvent, MoveEvent, NoEvent.

The CEventHandler class is used to register image and camera event handlers.

Info

Additional handling is required for GigE camera events because the event network packets can be lost, duplicated, or delayed on the network.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera device found independent of its interface.

The CConfigurationEventPrinter class is used for information purposes to print details about camera use.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.
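The reuse behavior can be pictured with standard C++ smart pointers. The following is a sketch of the general idea only, not pylon's implementation: BufferPool is a hypothetical class, and a std::shared_ptr with a custom deleter returns the buffer to a free list when the last reference goes out of scope. The sketch is not thread-safe.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

using Buffer = std::vector<std::uint8_t>;

// Hypothetical buffer pool (not part of pylon).
class BufferPool
{
public:
    BufferPool(std::size_t count, std::size_t bufferSize)
        : m_free(std::make_shared<FreeList>())
    {
        for (std::size_t i = 0; i < count; ++i)
        {
            m_free->push_back(std::make_unique<Buffer>(bufferSize));
        }
    }

    // Hands out a buffer; returns an empty pointer if none is free.
    std::shared_ptr<Buffer> Acquire()
    {
        if (m_free->empty())
        {
            return nullptr;
        }
        std::unique_ptr<Buffer> buffer = std::move(m_free->back());
        m_free->pop_back();
        // The deleter shares ownership of the free list, so a buffer stays
        // valid even if the pool object itself is destroyed first.
        std::shared_ptr<FreeList> freeList = m_free;
        return std::shared_ptr<Buffer>(
            buffer.release(),
            [freeList](Buffer* p) { freeList->push_back(std::unique_ptr<Buffer>(p)); });
    }

    std::size_t FreeCount() const { return m_free->size(); }

private:
    using FreeList = std::vector<std::unique_ptr<Buffer>>;
    std::shared_ptr<FreeList> m_free;
};
```

Copying the returned pointer does not take another buffer from the pool. The buffer becomes available again only after every copy has gone out of scope.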

Applicable Interfaces#

- GigE Vision

- USB3 Vision

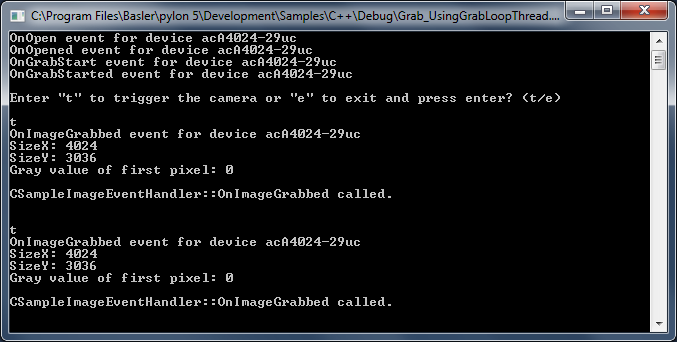

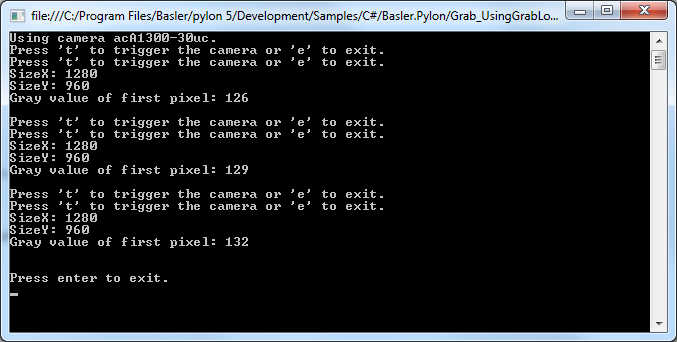

Grab_UsingGrabLoopThread#

This sample demonstrates how to grab and process images using the grab loop thread provided by the CInstantCamera class.

Code#

Info

You can find the sample code here.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The CSoftwareTriggerConfiguration class is used to register the standard configuration event handler for enabling software triggering. The software trigger configuration handler replaces the default configuration.

The CConfigurationEventPrinter class is used for information purposes to print details about camera use.

The CImageEventPrinter class serves as a placeholder for an image processing task. When using the grab loop thread provided by the Instant Camera object, an image event handler processing the grab results must be created and registered.

CanWaitForFrameTriggerReady() is used to check whether the camera device can be queried for its readiness to accept the next frame trigger.

StartGrabbing() demonstrates how to start grabbing using the grab loop thread by setting the grabLoopType parameter to GrabLoop_ProvidedByInstantCamera. The grab results are delivered to the image event handlers. The "OneByOne" default grab strategy is used in this case.

WaitForFrameTriggerReady() is used to wait up to 500 ms for the camera to be ready for triggering.

ExecuteSoftwareTrigger() is used to execute the software trigger.

The DisplayImage class is used to display the grabbed images.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

Grab_UsingOverflowEvent#

This sample combines software triggering with evaluation of Overflow events on Basler CXP frame grabbers. Images are acquired asynchronously by CInstantCamera, and each grab result is matched with the corresponding EventOverflowFrameId/EventOverflowIsEndOfSequence tag so the application knows precisely when a gated multiframe sequence has finished.

Configuring the Camera#

- Initialize pylon, create the first available device, and open it.

- Configure the following parameters on the camera and the frame grabber:

  - TestPattern = 6

  - AutomaticROIControl = false

  - Width/Height

  - ImageTriggerMode = AsyncGatedTriggerMultiframe

  - ImageTriggerInputSource = SoftwareTrigger

  - SetSoftwareTrigger = LowActive

- Enable Overflow events:

  - Set EventSelector to Overflow.

  - Set EventNotification to On.

  - Set OverflowEventSelect to All.

- Register a callback for EventOverflowData. The handler extracts EventOverflowFrameId and EventOverflowIsEndOfSequence and stores them in a thread-safe list.

- Start grabbing (StartGrabbing(count)) and run a background task that retrieves each CGrabResultPtr, aligns it with the oldest pending tag, and prints diagnostics.

- Send ten software triggers by toggling SetSoftwareTrigger in a loop.

- Wait for the grab loop to finish, deregister the callback, and terminate pylon.
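The thread-safe list of pending tags mentioned in the steps above could look roughly like this. The OverflowTag and OverflowTagQueue names are hypothetical and the sketch uses only the C++ standard library; the sample's own data structure may differ.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <mutex>

// Hypothetical record of the two values extracted by the event callback.
struct OverflowTag
{
    std::uint64_t frameId;
    bool isEndOfSequence;
};

// Hypothetical FIFO shared between the event callback (producer) and the
// task that retrieves grab results (consumer).
class OverflowTagQueue
{
public:
    // Called from the event callback.
    void Push(const OverflowTag& tag)
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        m_tags.push_back(tag);
    }

    // Called for each grab result; fetches the oldest pending tag.
    // Returns false if no tag has arrived yet.
    bool TryPopOldest(OverflowTag& tag)
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        if (m_tags.empty())
        {
            return false;
        }
        tag = m_tags.front();
        m_tags.pop_front();
        return true;
    }

private:
    std::mutex m_mutex;
    std::deque<OverflowTag> m_tags;
};
```

A consumer would call TryPopOldest() once per retrieved grab result and treat a tag with isEndOfSequence set as the last buffer of the current sequence.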

Key Transport Layer Parameters#

| Node | Purpose |
|---|---|
| EventSelector | Sets the event notification to be enabled. |
| EventNotification | Enables event notifications for the event currently selected by the EventSelector node. |
| OverflowEventSelect | Sets which overflow events result in notifications. |
| ImageTriggerMode | Sets the image trigger mode, e.g., AsyncGatedTriggerMultiframe. |
| ImageTriggerInputSource | Sets the trigger input source. |
| SetSoftwareTrigger | Sets the polarity that enables the trigger. |

Applicable Interfaces#

- CXP

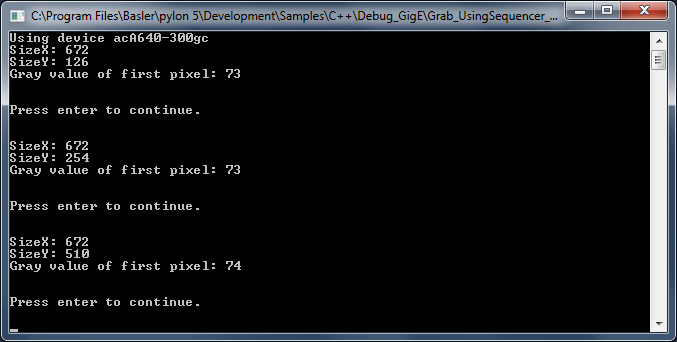

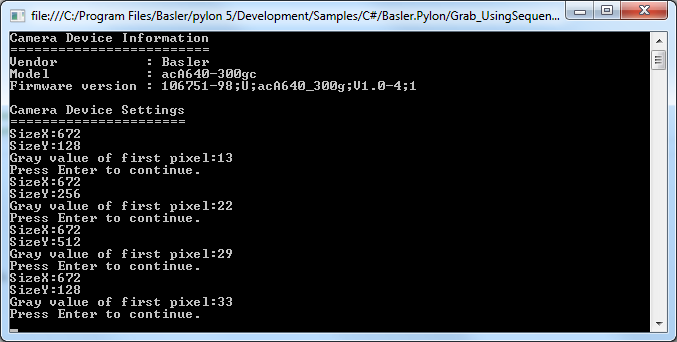

Grab_UsingSequencer#

This sample demonstrates how to grab images using the Sequencer feature of a Basler camera.

Three sequence sets are used for image acquisition. Each sequence set uses a different image height.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera device found independent of its interface.

The CSoftwareTriggerConfiguration class is used to register the standard configuration event handler for enabling software triggering. The software trigger configuration handler replaces the default configuration.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

The DisplayImage class is used to display the grabbed images.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

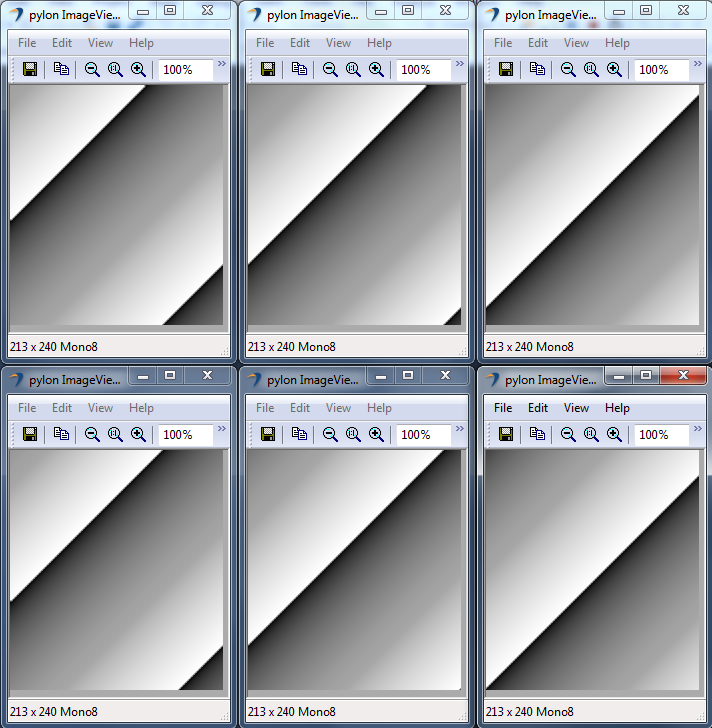

GUI_ImageWindow#

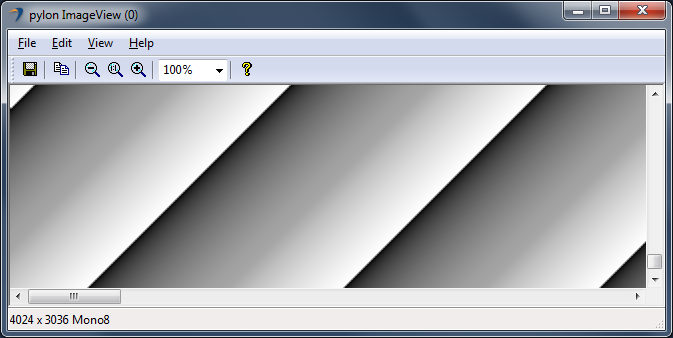

This sample demonstrates how to display images using the CPylonImageWindow class. Here, an image is grabbed and split into multiple tiles. Each tile is displayed in a separate image window.

Code#

Info

You can find the sample code here.

The CPylonImageWindow class is used to create an array of image windows for displaying camera image data.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

StartGrabbing() demonstrates how to start the grabbing by applying the GrabStrategy_LatestImageOnly grab strategy. Using this strategy is recommended when images have to be displayed.
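Why a latest-image-only strategy suits display can be seen in a small model. The LatestImageSlot class below is hypothetical and is not how pylon implements GrabStrategy_LatestImageOnly; it only shows the idea of a single slot in which a new image overwrites one that has not been retrieved yet, so a slow display never falls behind.

```cpp
#include <cassert>
#include <cstddef>
#include <mutex>
#include <utility>

// Hypothetical single-slot holder that keeps only the most recent image.
// Image must be default-constructible and movable.
template <typename Image>
class LatestImageSlot
{
public:
    // Producer side: overwrites any image that was not retrieved in time.
    void Put(Image image)
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        if (m_hasImage)
        {
            ++m_skipped;
        }
        m_image = std::move(image);
        m_hasImage = true;
    }

    // Consumer side: retrieves the most recent image, if there is one.
    bool TryTake(Image& out)
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        if (!m_hasImage)
        {
            return false;
        }
        out = std::move(m_image);
        m_hasImage = false;
        return true;
    }

    // Number of images that were overwritten before being retrieved.
    std::size_t SkippedCount() const
    {
        std::lock_guard<std::mutex> lock(m_mutex);
        return m_skipped;
    }

private:
    mutable std::mutex m_mutex;
    Image m_image{};
    bool m_hasImage = false;
    std::size_t m_skipped = 0;
};
```

If three images arrive before the consumer asks for one, the consumer receives the third and two are counted as skipped.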

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

The CPylonImage class is used to split the grabbed image into tiles, which in turn will be displayed in different image windows.
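The index arithmetic behind tiling can be sketched independently of pylon. The sample itself relies on CPylonImage for this step; the ExtractTile function below is a hypothetical illustration that assumes a row-major, 8-bit image without line padding.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: copies a rectangular tile out of a row-major,
// 8-bit image whose lines are stored without padding.
std::vector<std::uint8_t> ExtractTile(const std::vector<std::uint8_t>& image,
                                      std::size_t imageWidth,
                                      std::size_t imageHeight,
                                      std::size_t x, std::size_t y,
                                      std::size_t tileWidth,
                                      std::size_t tileHeight)
{
    assert(image.size() == imageWidth * imageHeight);
    assert(x + tileWidth <= imageWidth && y + tileHeight <= imageHeight);

    std::vector<std::uint8_t> tile;
    tile.reserve(tileWidth * tileHeight);
    for (std::size_t row = 0; row < tileHeight; ++row)
    {
        // First pixel of this tile row within the source image.
        const std::size_t offset = (y + row) * imageWidth + x;
        const auto first = image.begin() + static_cast<std::ptrdiff_t>(offset);
        tile.insert(tile.end(), first, first + static_cast<std::ptrdiff_t>(tileWidth));
    }
    return tile;
}
```

For a 4 x 2 image containing the values 0 to 7, the 2 x 2 tile at x = 2, y = 0 contains 2, 3, 6, 7.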

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

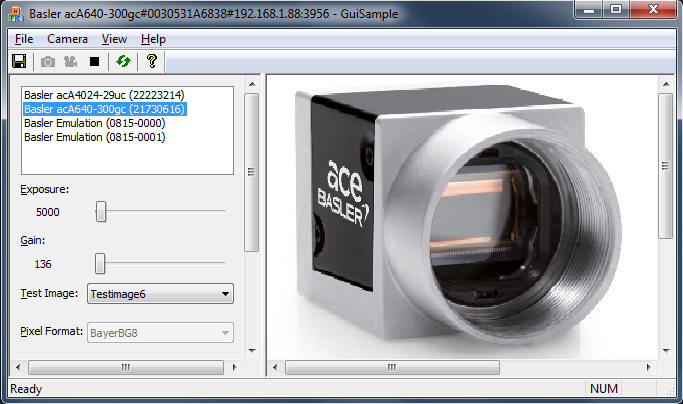

GUI_Sample#

This sample demonstrates the use of an MFC GUI together with the pylon C++ API to enumerate attached cameras, to configure a camera, to start and stop the grab and to display and store grabbed images.

It also shows you how to use GUI controls to display and modify camera parameters.

Code#

Info

You can find the sample code here.

When the Refresh button is clicked, CGuiSampleDoc::OnViewRefresh() is called, which in turn calls CGuiSampleApp::EnumerateDevices() to enumerate all attached devices.

By selecting a camera in the device list, CGuiSampleApp::OnOpenCamera() is called to open the selected camera. The Single Shot (Grab One) and Start (Grab Continuous) buttons as well as the Exposure, Gain, Test Image, and Pixel Format parameters are initialized and enabled now.

By clicking the Single Shot button, CGuiSampleDoc::OnGrabOne() is called. To grab a single image, StartGrabbing() is called with the following arguments:

m_camera.StartGrabbing(1, Pylon::GrabStrategy_OneByOne, Pylon::GrabLoop_ProvidedByInstantCamera);

When the image is received, pylon will call the CGuiSampleDoc::OnImageGrabbed() handler. To display the image, CGuiSampleDoc::OnNewGrabresult() is called.

By clicking the Start button, CGuiSampleDoc::OnStartGrabbing() is called.

To grab images continuously, StartGrabbing() is called with the following arguments:

m_camera.StartGrabbing(Pylon::GrabStrategy_OneByOne, Pylon::GrabLoop_ProvidedByInstantCamera);

In this case, the camera will grab images until StopGrabbing() is called.

When a new image is received, pylon will call the CGuiSampleDoc::OnImageGrabbed() handler. To display the image, CGuiSampleDoc::OnNewGrabresult() is called.

The Stop button gets enabled only after the Start button has been clicked. To stop continuous image acquisition, the Stop button has to be clicked. Upon clicking the Stop button, CGuiSampleDoc::OnStopGrab() is called.

When the Save button is clicked, CGuiSampleDoc::OnFileImageSaveAs() is called and a Bitmap (BMP) image will be saved (BMP is the default file format). Alternatively, the image can be saved in TIFF, PNG, JPEG, or Raw file formats.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

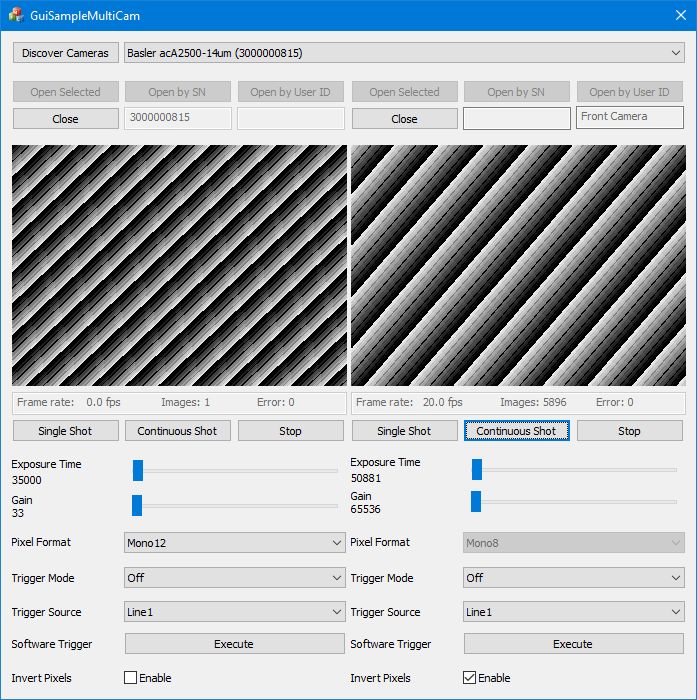

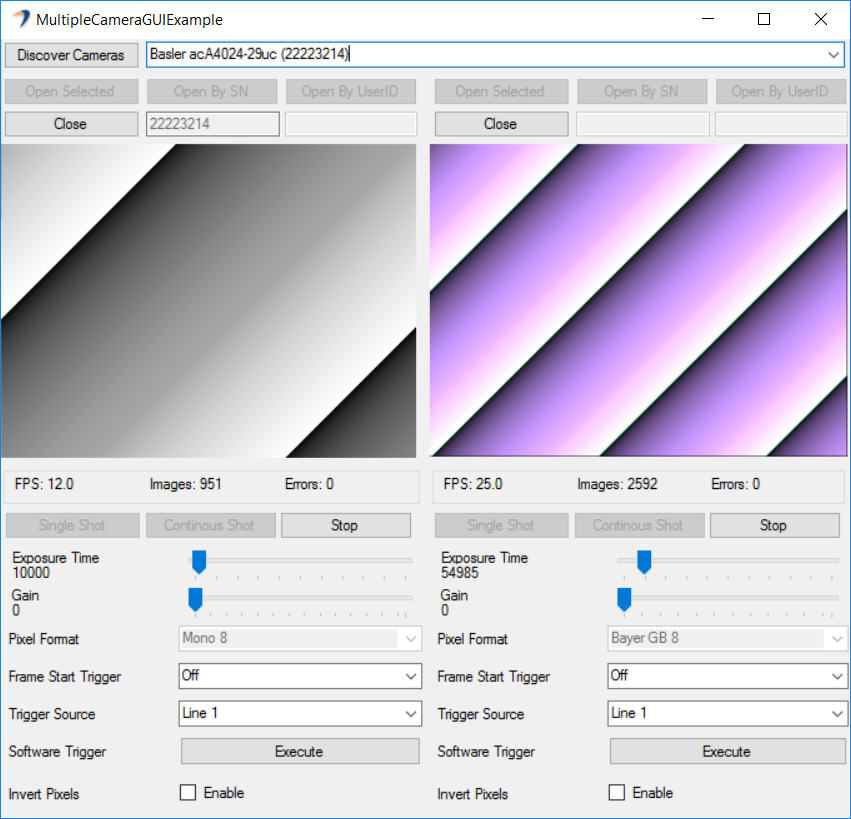

GUI_SampleMultiCam#

This sample demonstrates how to operate multiple cameras using an MFC GUI together with the pylon C++ API.

The sample demonstrates different techniques for opening a camera, e.g., by using its serial number or user device ID. It also contains an image processing example and shows how to handle device disconnections.

The sample covers single and continuous image acquisition using software as well as hardware triggering.

Code#

Info

You can find the sample code here.

When the Discover Cameras button is clicked, the CGuiSampleMultiCamDlg::OnBnClickedButtonScan() function is called, which in turn calls the CGuiSampleMultiCamDlg::EnumerateDevices() function to enumerate all attached devices.

By clicking the Open Selected button, the CGuiSampleMultiCamDlg::InternalOnBnClickedOpenSelected() function is called, which in turn calls the CGuiSampleMultiCamDlg::InternalOpenCamera() function to create a new device info object.

Then, the CGuiCamera::CGuiCamera() function is called to create a camera object and open the selected camera. In addition, callback functions for parameter changes are registered, e.g., for Exposure Time, Gain, Pixel Format, etc.

Cameras can be opened by clicking the Open by SN (SN = serial number) or Open by User ID button. The latter assumes that you have already assigned a user ID to the camera, e.g., in the pylon Viewer or via the pylon API.

After a camera has been opened, the following GUI elements become available:

- Single Shot, Continuous Shot, Stop, and Execute (for executing a software trigger) buttons

- Exposure Time and Gain sliders

- Pixel Format, Trigger Mode, and Trigger Source drop-down lists

- Invert Pixels check box

By clicking the Single Shot button, the CGuiCamera::SingleGrab() function is called. To grab a single image, the StartGrabbing() function is called with the following arguments:

m_camera.StartGrabbing(1, Pylon::GrabStrategy_OneByOne, Pylon::GrabLoop_ProvidedByInstantCamera);

When the image is received, pylon will call the CGuiCamera::OnImageGrabbed() handler. To display the image, the CGuiSampleMultiCamDlg::OnNewGrabresult() function is called.

By clicking the Continuous Shot button, the CGuiCamera::ContinuousGrab() function is called. To grab images continuously, the StartGrabbing() function is called with the following arguments:

m_camera.StartGrabbing(Pylon::GrabStrategy_OneByOne, Pylon::GrabLoop_ProvidedByInstantCamera);

In this case, the camera will grab images until StopGrabbing() is called.

When a new image is received, pylon will call the CGuiCamera::OnImageGrabbed() handler. To display the image, the CGuiSampleMultiCamDlg::OnNewGrabresult() function is called.

This sample also demonstrates the triggering of cameras by using a software trigger. For this purpose, the Trigger Mode parameter has to be set to On, and the Trigger Source parameter has to be set to Software. When starting a single or a continuous image acquisition, the camera will then be waiting for a software trigger.

By clicking the Execute button, the CGuiCamera::ExecuteSoftwareTrigger() function will be called, which will execute a software trigger.

For triggering the camera by hardware trigger, set Trigger Mode to On and Trigger Source to, e.g., Line1. When starting a single or a continuous image acquisition, the camera will then be waiting for a hardware trigger.

By selecting the Invert Pixels check box, an example of image processing will be shown. In the example, the pixel data will be inverted. This is done in the CGuiCamera::OnNewGrabResult() function.
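Pixel inversion itself is a one-line operation per pixel. The sketch below assumes 8-bit pixel data held in a plain byte vector; the InvertPixels name is hypothetical, and the sample performs the equivalent step on the grab result buffer.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: inverts 8-bit pixel data in place, so that
// 0 becomes 255, 255 becomes 0, and so on.
void InvertPixels(std::vector<std::uint8_t>& pixels)
{
    for (std::uint8_t& pixel : pixels)
    {
        pixel = static_cast<std::uint8_t>(255 - pixel);
    }
}
```

Applying the function twice restores the original data.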

Finally, this sample also shows the use of Device Removal callbacks. If an already opened camera is disconnected, the CGuiCamera::OnCameraDeviceRemoved() function is called. In turn, the CGuiSampleMultiCamDlg::OnDeviceRemoved() function will be called to inform the user about the disconnected camera.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CoaXPress

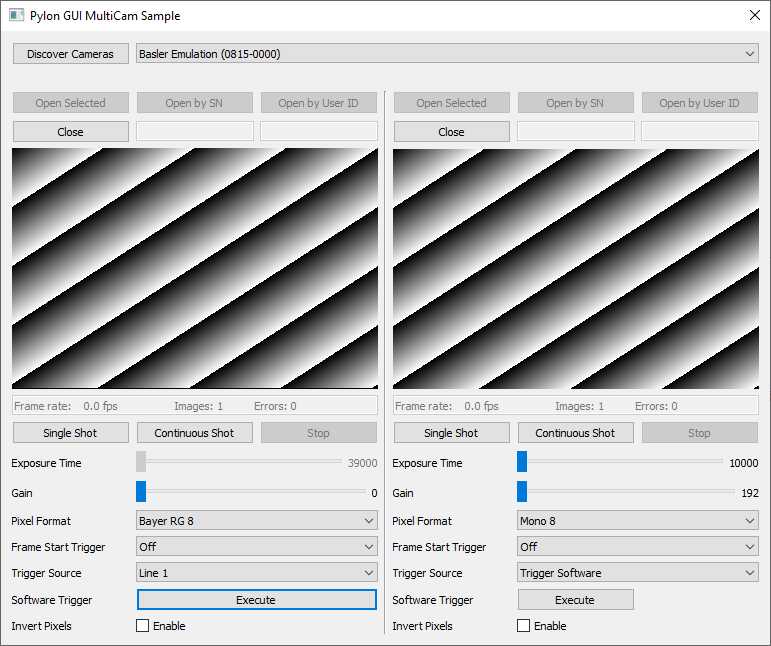

GUI_QtMultiCam#

This sample demonstrates how to operate multiple cameras using a Qt GUI together with the pylon C++ API.

Info

An installation of Qt Creator 5.12 or newer and a Microsoft Visual C++ Compiler are required.

The sample demonstrates different techniques for opening a camera, e.g., by using its serial number or user device ID. It also contains an image-processing example and shows how to handle device disconnections.

The sample covers single and continuous image acquisition using software as well as hardware triggering.

Code#

When you click the Discover Cameras button, the MainDialog::on_scanButton_clicked() function is called, which in turn calls the MainDialog::EnumerateDevices() function to enumerate all attached devices.

By clicking the Open Selected button, the MainDialog::on_openSelected_1_clicked() or the MainDialog::on_openSelected_2_clicked() function is called, which in turn calls the CGuiCamera::Open() function to create a camera object and to open the selected camera. In addition, callback functions for parameter changes are registered, e.g., for Exposure Time, Gain, Pixel Format, etc.

Cameras can be opened by clicking the Open by SN (SN = serial number) or Open by User ID button. The latter assumes that you have already assigned a user ID to the camera, e.g., in the pylon Viewer or via the pylon API.

After a camera has been opened, the following GUI elements become available:

- Single Shot, Continuous Shot, Stop, and Execute (for executing a software trigger) buttons

- Exposure Time and Gain sliders

- Pixel Format, Trigger Mode, and Trigger Source drop-down lists

- Invert Pixels check box

By clicking the Single Shot button, the CGuiCamera::SingleGrab() function is called. To grab a single image, the StartGrabbing() function is called with the following arguments:

m_camera.StartGrabbing(1, Pylon::GrabStrategy_OneByOne, Pylon::GrabLoop_ProvidedByInstantCamera);

When the image is received, pylon calls the CGuiCamera::OnImageGrabbed() handler.

By clicking the Continuous Shot button, the CGuiCamera::ContinuousGrab() function is called. To grab images continuously, the StartGrabbing() function is called with the following arguments:

m_camera.StartGrabbing(Pylon::GrabStrategy_OneByOne, Pylon::GrabLoop_ProvidedByInstantCamera);

In this case, the camera grabs images until StopGrabbing() is called.

When a new image is received, pylon calls the CGuiCamera::OnImageGrabbed() handler.

This sample also demonstrates the triggering of cameras by using a software trigger. For this purpose, the Trigger Mode parameter has to be set to On, and the Trigger Source parameter has to be set to Software. When starting a single or a continuous image acquisition, the camera then waits for a software trigger.

By clicking the Execute button, the CGuiCamera::ExecuteSoftwareTrigger() function is called, which executes a software trigger.

For triggering the camera by hardware trigger, set Trigger Mode to On and Trigger Source to, e.g., Line1. When starting a single or a continuous image acquisition, the camera then waits for a hardware trigger.

By selecting the Invert Pixels check box, an example of image processing is shown. In the example, the pixel data is inverted. This is done in the CGuiCamera::OnImageGrabbed() function.

Finally, this sample also shows the use of device removal callbacks. If an already opened camera is disconnected, the CGuiCamera::OnCameraDeviceRemoved() function is called. In turn, the MainDialog::OnDeviceRemoved() function is called to inform the user about the disconnected camera.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CoaXPress

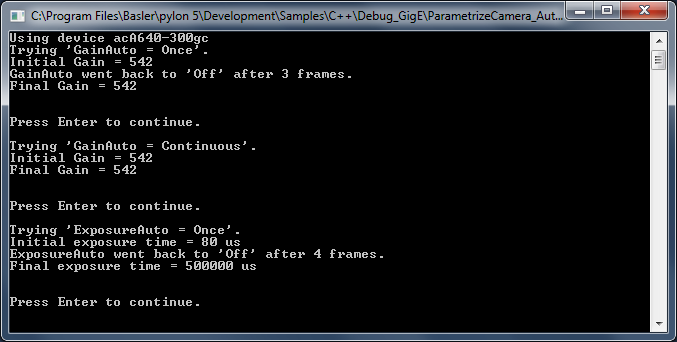

ParametrizeCamera_AutoFunctions#

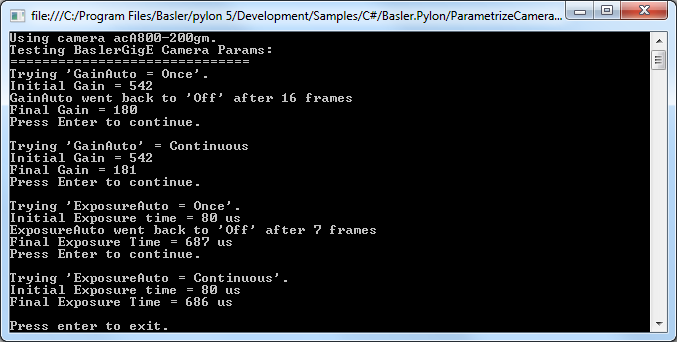

This sample demonstrates how to use the auto functions of Basler cameras, e.g., Gain Auto, Exposure Auto, and Balance White Auto (color cameras only).

Info

Different camera families implement different versions of the Standard Feature Naming Convention (SFNC). As a result, the names and types of the parameters used can differ.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera device found independent of its interface.

The CAcquireSingleFrameConfiguration class is used to register the standard event handler for configuring single frame acquisition. This overrides the default configuration as all event handlers are removed by setting the registration mode to RegistrationMode_ReplaceAll. Note that the camera device auto functions do not require grabbing by single frame acquisition. All available acquisition modes can be used.

The AutoGainOnce() and AutoGainContinuous() functions control brightness by using the Once and the Continuous modes of the Gain Auto auto function.

The AutoExposureOnce() and AutoExposureContinuous() functions control brightness by using the Once and the Continuous modes of the Exposure Auto auto function.

The CBaslerUniversalGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. The DisplayImage class is used to display the grabbed images.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

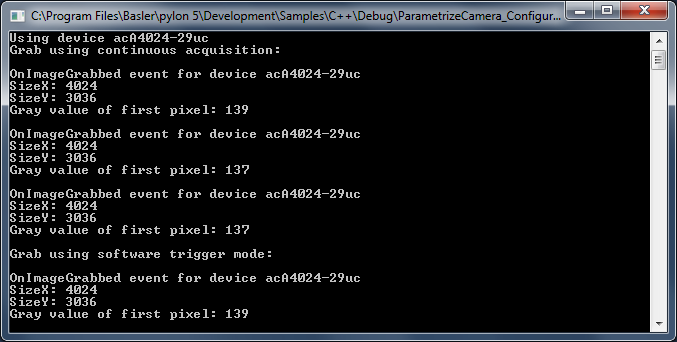

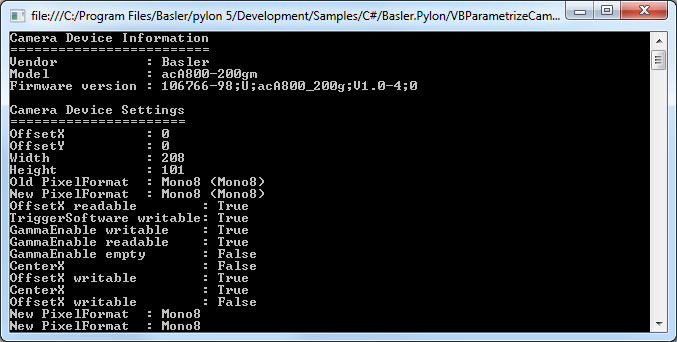

ParametrizeCamera_Configurations#

The Instant Camera class provides configuration event handlers to configure the camera and handle grab results. This is very useful for standard camera setups and image processing tasks.

This sample demonstrates how to use the existing configuration event handlers and how to register your own configuration event handlers.

Configuration event handlers are derived from the CConfigurationEventHandler base class. This class provides virtual methods that can be overridden. If the configuration event handler is registered, these methods are called when the state of the Instant Camera object changes, e.g., when the camera object is opened or closed.

The standard configuration event handler provides an implementation for the OnOpened() method that parametrizes the camera.

To override Basler's implementation, create your own handler derived from CConfigurationEventHandler and register it with the Instant Camera object.

Device-specific camera classes, e.g., for GigE cameras, provide specialized event handler base classes, e.g., CBaslerGigEConfigurationEventHandler.

Code#

Info

You can find the sample code here.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The CImageEventPrinter class is used to output details about the grabbed images.

The CGrabResultPtr class is used to initialize a smart pointer that receives the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

The CAcquireContinuousConfiguration class is the default configuration of the Instant Camera class. It is automatically registered when an Instant Camera object is created. This Instant Camera configuration is provided as a header-only file. The code can be copied and modified to create your own configuration classes.

In this sample, the standard configuration event handler is registered for configuring the camera for continuous acquisition. By setting the registration mode to RegistrationMode_ReplaceAll, the new configuration handler replaces the default configuration handler that has been automatically registered when creating the Instant Camera object. The handler is automatically deleted when deregistered or when the registry is cleared if Cleanup_Delete is specified.

The CSoftwareTriggerConfiguration class is used to register the standard configuration event handler for enabling software triggering. This Instant Camera configuration is provided as a header-only file. The code can be copied and modified to create your own configuration classes, e.g., to enable hardware triggering. The software trigger configuration handler replaces the default configuration.

The CAcquireSingleFrameConfiguration class is used to register the standard event handler for configuring single frame acquisition. This overrides the default configuration as all event handlers are removed by setting the registration mode to RegistrationMode_ReplaceAll.

The CPixelFormatAndAoiConfiguration class is used to register an additional configuration handler to set the image format and adjust the image ROI. This Instant Camera configuration is provided as a header-only file. The code can be copied and modified to create your own configuration classes.

By setting the registration mode to RegistrationMode_Append, the configuration handler is added instead of replacing the configuration handler already registered.
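The difference between the two registration modes can be modeled with a small registry. All names in this sketch (RegistrationMode, ConfigurationHandler, NamedHandler, HandlerRegistry) are hypothetical stand-ins and not the pylon classes; the registry owns its handlers, which loosely corresponds to registering with Cleanup_Delete.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins for the registration modes described above.
enum class RegistrationMode { Append, ReplaceAll };

// Hypothetical handler base class with one overridable method.
struct ConfigurationHandler
{
    virtual ~ConfigurationHandler() = default;
    virtual std::string OnOpened() const = 0;
};

// Hypothetical handler that just reports its own name when called.
struct NamedHandler : ConfigurationHandler
{
    explicit NamedHandler(std::string n) : name(std::move(n)) {}
    std::string OnOpened() const override { return name; }
    std::string name;
};

class HandlerRegistry
{
public:
    void Register(std::unique_ptr<ConfigurationHandler> handler, RegistrationMode mode)
    {
        if (mode == RegistrationMode::ReplaceAll)
        {
            m_handlers.clear();  // removes and deletes all earlier handlers
        }
        m_handlers.push_back(std::move(handler));
    }

    // Calls every registered handler in registration order.
    std::vector<std::string> NotifyOpened() const
    {
        std::vector<std::string> called;
        for (const auto& handler : m_handlers)
        {
            called.push_back(handler->OnOpened());
        }
        return called;
    }

private:
    std::vector<std::unique_ptr<ConfigurationHandler>> m_handlers;
};
```

Registering a default handler, then a second one with ReplaceAll, then a third with Append leaves only the second and third handlers to be called, in that order.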

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

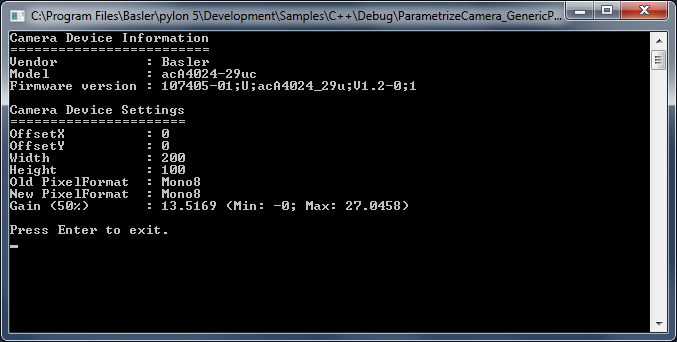

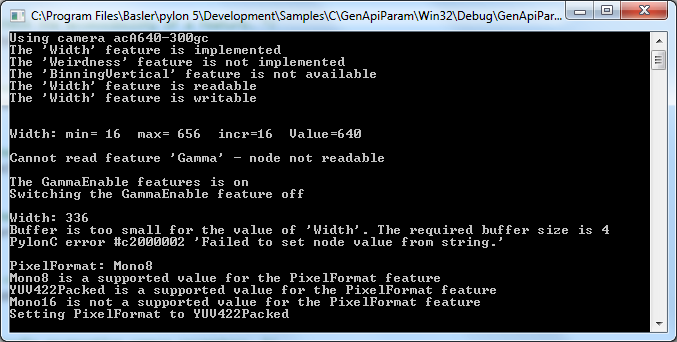

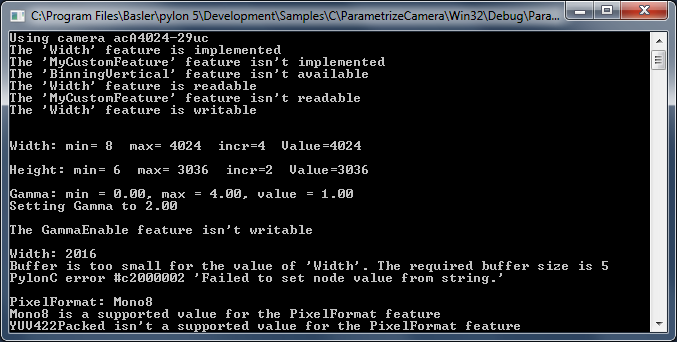

ParametrizeCamera_GenericParameterAccess#

This sample illustrates how to read and write different camera parameter types.

For camera configuration and for accessing other parameters, the pylon API uses the technologies defined by the GenICam standard. The standard also defines a format for camera description files.

These files describe the configuration interface of GenICam-compliant cameras. The description files are written in XML and describe camera registers, their interdependencies, and all other information needed to access high-level features. This includes features such as Gain, Exposure Time, or Pixel Format. The features are accessed by means of low-level register read and write operations.

The elements of a camera description file are represented as parameter objects called nodes. For example, a node can represent a single camera register, a camera parameter such as Gain, or a set of parameter values. Each node implements the GenApi::INode interface.

The nodes are linked together by different relationships as explained in the GenICam standard document. The complete set of nodes is stored in a data structure called a node map. At runtime, the node map is instantiated from an XML description file.

This sample shows the generic approach for configuring a camera using the GenApi node maps represented by the GenApi::INodeMap interface. The names and types of the parameter nodes can be found in the pylon API Documentation section and by using the pylon Viewer tool.

See also the ParametrizeCamera_NativeParameterAccess sample for the native approach for configuring a camera.

Code#

Info

You can find the sample code here.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The INodeMap interface is used to access the feature node map of the camera device. It provides access to all features supported by the camera.

CIntegerPtr is a smart pointer for the IInteger interface pointer. It is used to access camera features of the int64_t type, e.g., image ROI (region of interest).
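Integer features such as the ROI width typically accept only values within a minimum and maximum and on a fixed increment grid. The following sketch shows one way to adjust a requested value before writing it; the Adjust name and signature are assumptions for illustration, and in real code the limits would be read from the node (GetMin(), GetMax(), and GetInc() on the IInteger interface).

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: clamps a requested value to [minimum, maximum] and
// rounds it down to the nearest value reachable from minimum in steps of
// increment.
std::int64_t Adjust(std::int64_t value,
                    std::int64_t minimum,
                    std::int64_t maximum,
                    std::int64_t increment)
{
    assert(increment > 0);
    assert(minimum <= maximum);

    if (value < minimum)
    {
        return minimum;
    }
    if (value > maximum)
    {
        value = maximum;
    }
    // Round down onto the increment grid that starts at minimum.
    return minimum + ((value - minimum) / increment) * increment;
}
```

For example, a requested width of 202 with a minimum of 0, a maximum of 1000, and an increment of 4 is adjusted to 200.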

CEnumerationPtr is a smart pointer for the IEnumeration interface pointer. It is used to access camera features of the enumeration type, e.g., Pixel Format.

CFloatPtr is a smart pointer for the IFloat interface pointer. It is used to access camera features of the float type, e.g., Gain (only on camera devices compliant with SFNC version 2.0).

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- Camera Link

- CXP

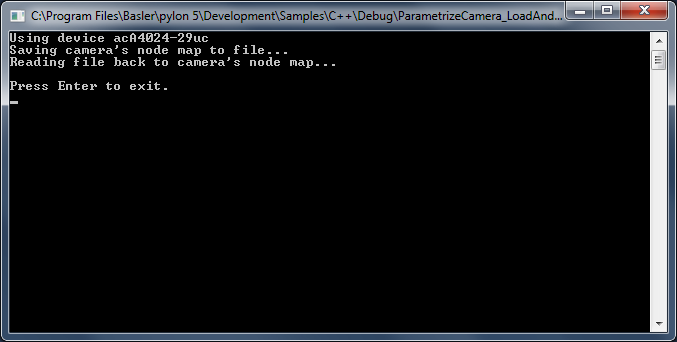

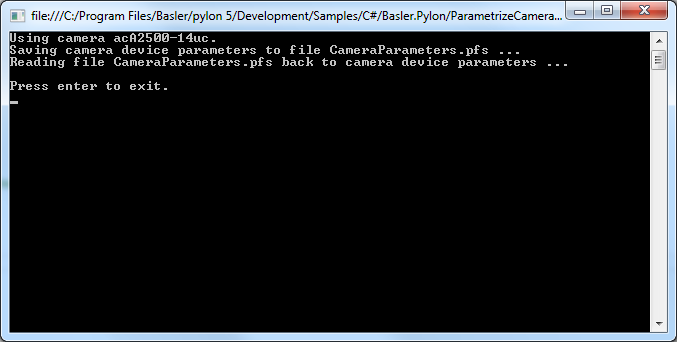

ParametrizeCamera_LoadAndSave#

This sample application demonstrates how to save or load the features of a camera to or from a file.

Code#

Info

You can find the sample code here.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The CFeaturePersistence class is a pylon utility class for saving and restoring camera features to and from a file or string.

Info

When saving features, the behavior of cameras supporting sequencers depends on the current setting of the "SequenceEnable" (some GigE models) or "SequencerConfigurationMode" (USB only) features, respectively. The sequence sets are only exported if the sequencer is in configuration mode. Otherwise, the camera features are exported without sequence sets.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- Camera Link

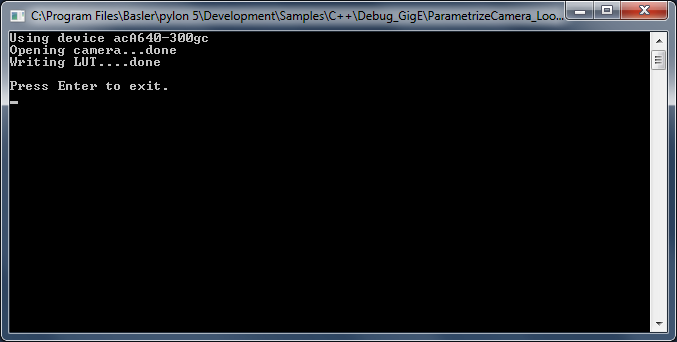

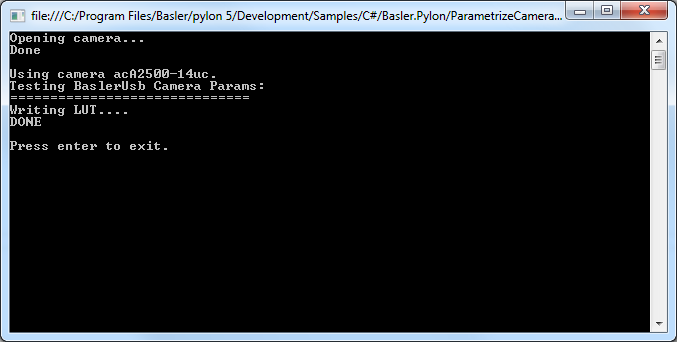

ParametrizeCamera_LookupTable#

This sample demonstrates the use of the Luminance Lookup Table feature independent of the camera interface.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera device found independent of its interface.

The camera feature LUTSelector is used to select the lookup table. As some cameras have 10-bit and others have 12-bit lookup tables, the type of the lookup table for the current device must be determined first. The LUTIndex and LUTValue parameters are used to access the lookup table values. This sample demonstrates how the lookup table can be used to cause an inversion of the sensor values.
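The inversion table can be computed independently of the camera. This sketch builds the full table for a given bit depth; the BuildInvertingLut name is hypothetical, and whether every index or only a subset has to be written through LUTIndex and LUTValue depends on the camera and is not covered here.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: builds a lookup table that maps each input value
// to its inverse for the given bit depth (e.g., 10 or 12 bits).
std::vector<std::uint32_t> BuildInvertingLut(unsigned bitDepth)
{
    assert(bitDepth > 0 && bitDepth <= 16);

    const std::uint32_t entries = 1u << bitDepth;  // 1024 for 10 bits
    std::vector<std::uint32_t> lut(entries);
    for (std::uint32_t index = 0; index < entries; ++index)
    {
        // The largest value maps to 0, and 0 maps to the largest value.
        lut[index] = (entries - 1) - index;
    }
    return lut;
}
```

A 10-bit table has 1024 entries and maps 0 to 1023; a 12-bit table has 4096 entries and maps 0 to 4095.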

Applicable Interfaces#

- GigE Vision

- USB3 Vision

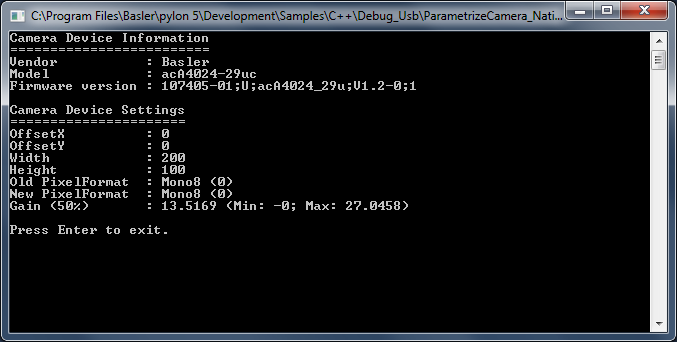

ParametrizeCamera_NativeParameterAccess#

This sample shows the native approach for configuring a camera using device-specific Instant Camera classes. See also the ParametrizeCamera_GenericParameterAccess sample for the generic approach for configuring a camera.

For camera configuration and for accessing other parameters, the pylon API uses the technologies defined by the GenICam standard. The standard also defines a format for camera description files.

These files describe the configuration interface of GenICam-compliant cameras. The description files are written in XML and describe camera registers, their interdependencies, and all other information needed to access high-level features. This includes features such as Gain, Exposure Time, or Pixel Format. The features are accessed by means of low-level register read and write operations.

The elements of a camera description file are represented as parameter objects, also called nodes. For example, a node can represent a single camera register, a camera parameter such as Gain, or a set of parameter values. Each node implements the GenApi::INode interface.

Using the code generators provided by GenICam's GenApi module, a programming interface is created from a camera description file. This provides a function for each parameter that is available for the camera device. The programming interface is exported by the device-specific Instant Camera classes. This is the easiest way to access parameters.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera device found independent of its interface.

This sample demonstrates the use of camera features of the IInteger type, e.g., Width, Height, GainRaw (available on camera devices compliant with SFNC versions before 2.0), of the IEnumeration type, e.g., Pixel Format, or of the IFloat type, e.g., Gain (available on camera devices compliant with SFNC version 2.0).

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- Camera Link

- CXP

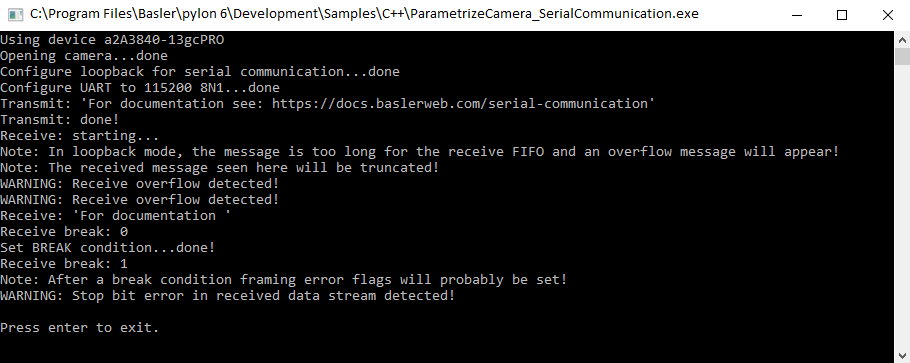

ParametrizeCamera_SerialCommunication#

This sample demonstrates the use of the Serial Communication feature (UART) supported by ace 2 Pro cameras. This feature allows you to establish serial communication between a host and an external device through the camera's I/O lines. For more information, see the Serial Communication feature topic.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera found. Make sure to use an ace 2 Pro camera that supports the serial communication feature. Otherwise, an exception will be thrown when trying to access and configure the camera's I/O lines.

To test the serial communication without having an external device connected to the camera, or to rule out errors caused by the external device, you can configure a loopback mode on the camera. This is done by setting the BslSerialRxSource parameter to SerialTx.

In this case, the serial input is connected to the serial output internally, so the camera receives exactly what it transmits.

To configure the serial communication between the camera and an external device, the GPIO Line 2 (SerialTx) and GPIO Line 3 (BslSerialRxSource) must be configured accordingly. Make sure not to use the opto-coupled I/O lines for UART communications.

In addition, depending on the configuration of the external device, the camera's baud rate (BslSerialBaudRate), the number of data bits (BslSerialNumberOfDataBits), the number of stop bits (BslSerialNumberOfStopBits), and the kind of parity check (BslSerialParity) must be configured.

After the serial communication has been configured, you can send data to the external device via the SerialTransmit() function and receive data from it via the SerialReceive() function.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

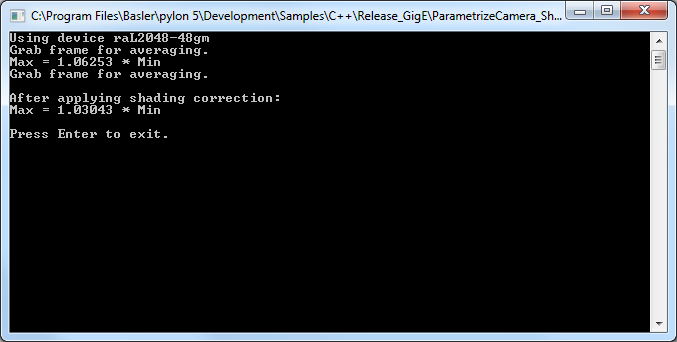

ParametrizeCamera_Shading#

This sample demonstrates how to calculate and upload gain shading sets to Basler racer and Basler runner line scan GigE Vision cameras.

Code#

Info

You can find the sample code here.

The CDeviceInfo class is used to look for cameras with a specific interface, e.g., GigE Vision only (BaslerGigEDeviceClass).

The CBaslerUniversalInstantCamera class is used to create a camera object with the first GigE camera found.

The CAcquireSingleFrameConfiguration class is used to register the standard event handler for configuring single frame acquisition. This overrides the default configuration as all event handlers are removed by setting the registration mode to RegistrationMode_ReplaceAll.

CreateShadingData() assumes that the conditions for exposure (illumination, exposure time, etc.) have been set up to deliver images of uniform intensity (gray value), but that the acquired images are not uniform. The gain shading data is calculated so that the observed non-uniformity will be compensated when the data is applied. The data is saved in a local file.
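The idea behind the gain calculation can be illustrated with a short sketch. It is not the sample's implementation: the helper name ComputeGainShading is hypothetical, the choice of the brightest column as the target level is an assumption, and the file format that the camera expects is not modeled here.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of the pylon API): computes one gain
// factor per column so that columnMeans[c] * gains[c] equals the
// brightest column mean. Normalizing to the brightest column is an
// assumption made for this sketch.
std::vector<double> ComputeGainShading(const std::vector<double>& columnMeans)
{
    std::vector<double> gains(columnMeans.size(), 1.0);
    if (columnMeans.empty())
    {
        return gains;
    }
    const double target = *std::max_element(columnMeans.begin(), columnMeans.end());
    for (std::size_t c = 0; c < columnMeans.size(); ++c)
    {
        // A column without any signal cannot be corrected by gain;
        // its factor stays at 1.0.
        if (columnMeans[c] > 0.0)
        {
            gains[c] = target / columnMeans[c];
        }
    }
    return gains;
}
```

With column means of 100, 200, and 50, the resulting gain factors are 2, 1, and 4, so all columns reach the level of the brightest one after correction.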

UploadFile() transfers the calculated gain shading data from the local file to the camera.

CheckShadingData() tests to what extent the non-uniformity has been compensated.

Applicable Interfaces#

- GigE Vision

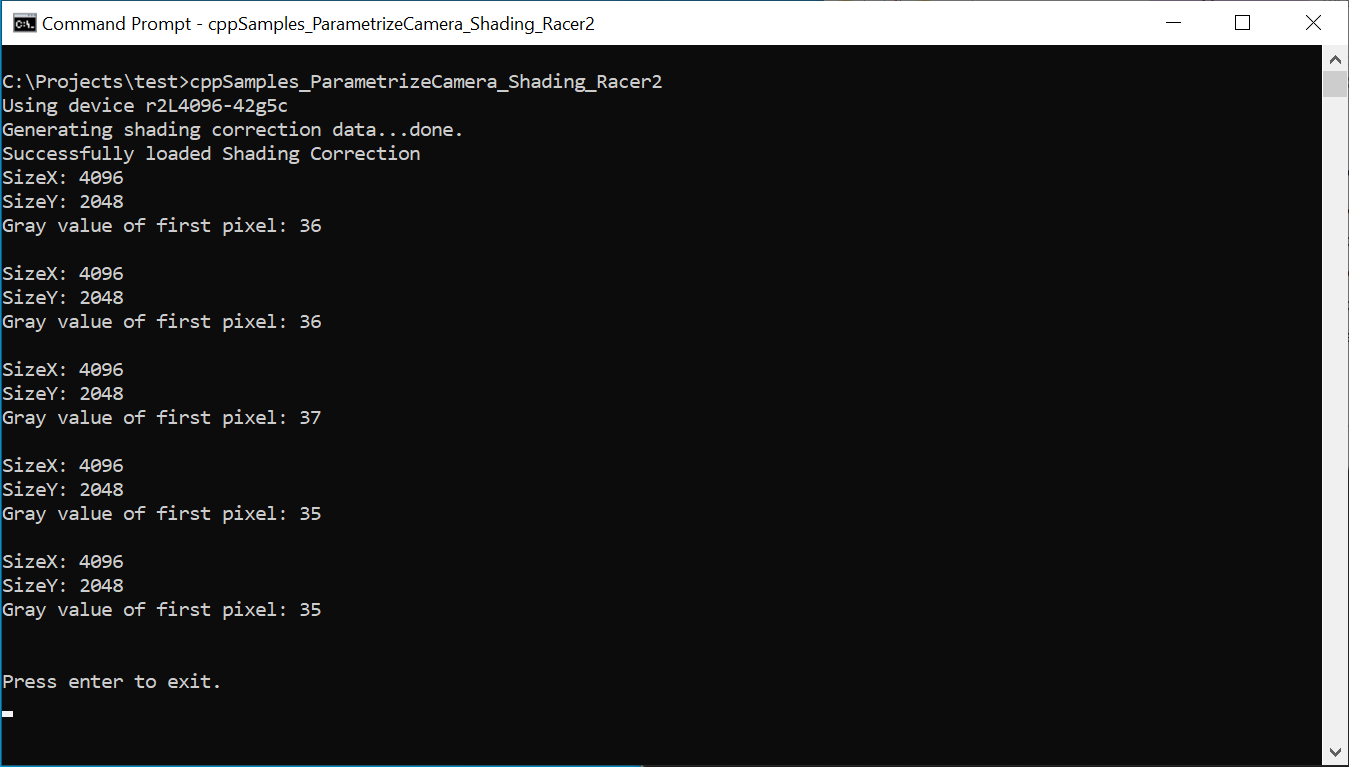

ParametrizeCamera_Shading_Racer2#

This sample demonstrates how to create and use shading sets with Basler racer 2 line scan cameras. Compared to racer cameras, this process has become much easier.

Attention

Executing this sample will overwrite all current settings in user shading set 1.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera found. This object is then used to ensure that it is a line scan camera that supports the racer 2 type of shading correction.

The BslShadingCorrectionSelector, BslShadingCorrectionMode, and BslShadingCorrectionSetIndex camera parameters are used to select the type of shading correction and the storage location of the correction data.

Once image capture has been started, the BslShadingCorrectionSetCreate camera parameter can be used to start the automatic generation of the shading data.

The BslShadingCorrectionSetCreateStatus and BslShadingCorrectionSetStatus parameters can then be used to check whether the generation of the data has been completed and was successful.

Once the shading data has been generated, it is used immediately to correct subsequent image captures.

Applicable Interfaces#

- GigE Vision

- CXP

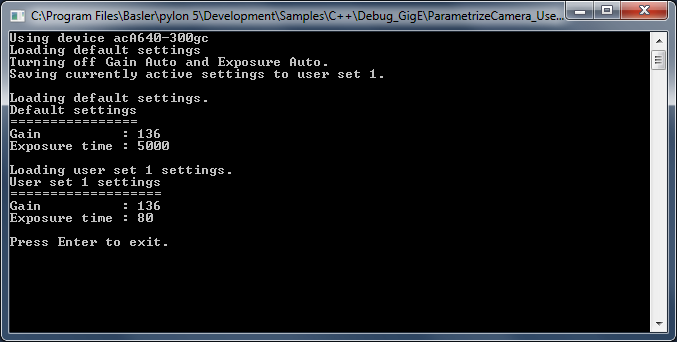

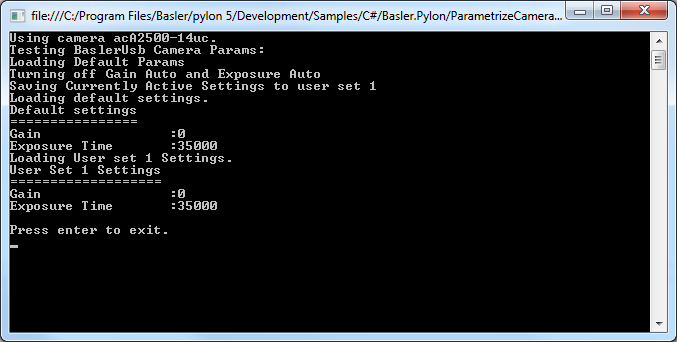

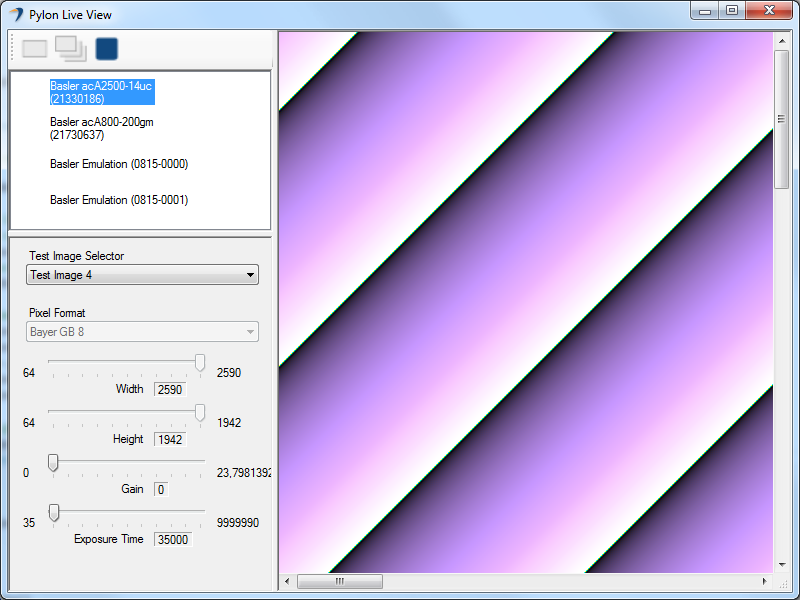

ParametrizeCamera_UserSets#

This sample demonstrates how to use user configuration sets (user sets) and how to configure the camera to start up with the user-defined settings of user set 1.

You can also use the pylon Viewer to configure your camera and store custom settings in a user set of your choice.

Info

Different camera families implement different versions of the Standard Feature Naming Convention (SFNC). That's why the name and the type of the parameters used can be different.

Attention

Executing this sample will overwrite all current settings in user set 1.

Code#

Info

You can find the sample code here.

The CBaslerUniversalInstantCamera class is used to create a camera object with the first camera device found independent of its interface.

The camera parameters UserSetSelector, UserSetLoad, UserSetSave, and UserSetDefaultSelector are used to demonstrate the use of user configuration sets (user sets) and how to configure the camera to start up with user-defined settings.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- Camera Link

- CXP

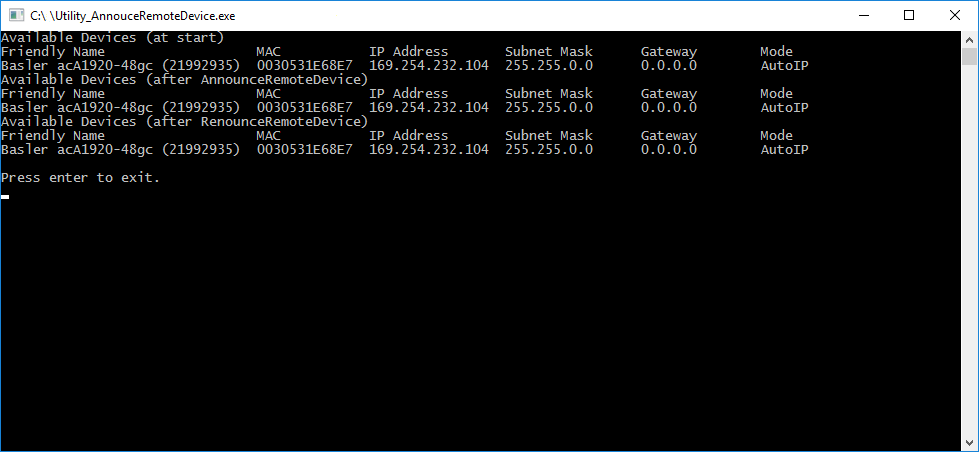

Utility_ChangeApplet#

This sample shows two different methods for changing an applet on a Basler CXP frame grabber. An applet reconfigures the on-board logic, e.g., switching from a monochrome stream to a Bayer-demosaicing pipeline. A newly selected applet only becomes active after all devices using the applet have been closed and the hardware has been enumerated again.

Configuring the Camera#

- Open the CXP interface (BaslerGenTlCxpDeviceClass) with CUniversalInstantInterface.

- Access the interface node map and read the current value of InterfaceApplet and InterfaceAppletStatus.

- List available applets and pick the first one that differs from the current setting.

- Method 1 - Refresh device list:

  - Close all devices.

  - Call EnumerateDevices.

  - Check that InterfaceAppletStatus reports Loaded.

- Method 2 - Restart pylon runtime:

  - Terminate pylon completely (PylonTerminate()).

  - Re-initialize pylon (PylonInitialize()).

  - Reopen the interface, and verify the status.

Both approaches end with the desired applet loaded and ready for use.

Key Transport Layer Parameters#

| Node | Purpose |
|---|---|
| InterfaceApplet | Sets the desired applet. |
| InterfaceAppletStatus | Reports the current load state, e.g., Loaded, Loading, Error. |

Applicable Interfaces#

- CXP

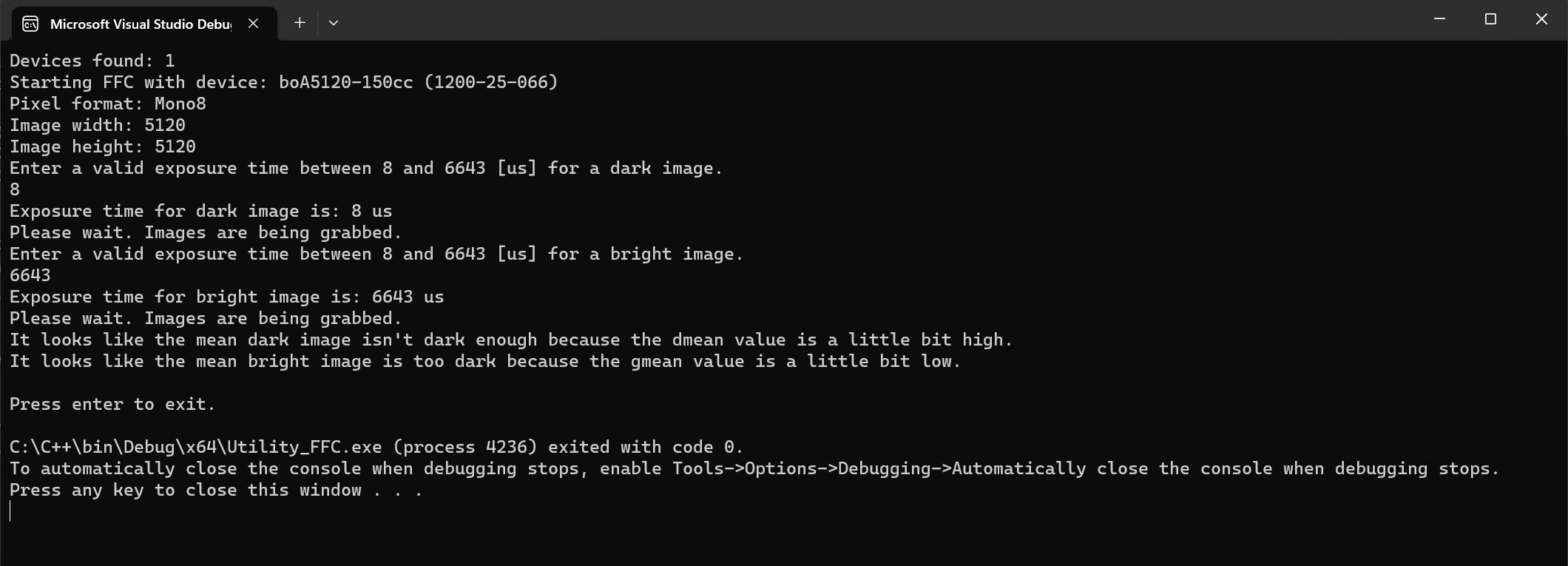

Utility_FFC#

This sample demonstrates how to configure and use the Flat-Field Correction (FFC) feature of Basler boost V CXP-12 cameras.

Flat-field correction is used to eliminate differences in the brightness of pixels. The process consists of two steps:

- In step 1, sequences of dark field and bright field (flat field) images are acquired to detect dark signal non-uniformities (DSNU), e.g., dark current noise, and photo response non-uniformities (PRNU) respectively.

- In step 2, correction values are determined and uploaded to the camera.

For more information, see the Flat-Field Correction feature topic.

Code#

The findBoostCam() function is used to filter out any cameras that don't support the Flat-Field Correction feature.

In the main() method, the camera's Width, Height, PixelFormat, and ExposureTime parameters are configured for optimum results.

The processImages() function is used to grab a specified number of images. The gray values of all pixels in these images are then added up and divided by the number of images to create an average image. Next, the average gray value of each column of the average image is calculated. This forms the basis for calculating the DSNU and PRNU correction values. This is done for both dark field and flat field images.
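The averaging steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the sample's code: the names Image, AverageImages, and ColumnMeans are hypothetical, images are assumed to be 8-bit mono and stored row by row, and every image is assumed to contain exactly width * height pixels. How the DSNU and PRNU correction values are derived from the column means is not shown here.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical image layout for this sketch: 8-bit mono, row by row.
using Image = std::vector<std::uint8_t>;

// Adds up the gray values of all images pixel by pixel and divides by
// the number of images to create an average image.
std::vector<double> AverageImages(const std::vector<Image>& images,
                                  std::size_t width, std::size_t height)
{
    std::vector<double> average(width * height, 0.0);
    if (images.empty())
    {
        return average;
    }
    for (const Image& image : images)
    {
        for (std::size_t i = 0; i < average.size(); ++i)
        {
            average[i] += image[i];
        }
    }
    for (double& value : average)
    {
        value /= static_cast<double>(images.size());
    }
    return average;
}

// Calculates the average gray value of each column of the average image.
std::vector<double> ColumnMeans(const std::vector<double>& average,
                                std::size_t width, std::size_t height)
{
    std::vector<double> means(width, 0.0);
    if (height == 0)
    {
        return means;
    }
    for (std::size_t y = 0; y < height; ++y)
    {
        for (std::size_t x = 0; x < width; ++x)
        {
            means[x] += average[y * width + x];
        }
    }
    for (double& mean : means)
    {
        mean /= static_cast<double>(height);
    }
    return means;
}
```

The same two functions would be applied once to the dark field images and once to the flat field images.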

Once the correction values for DSNU and PRNU have been calculated, they are transferred to the camera's flash memory. With this, the camera can perform flat-field correction in real-time by itself.

Applicable Interfaces#

- CXP (boost V)

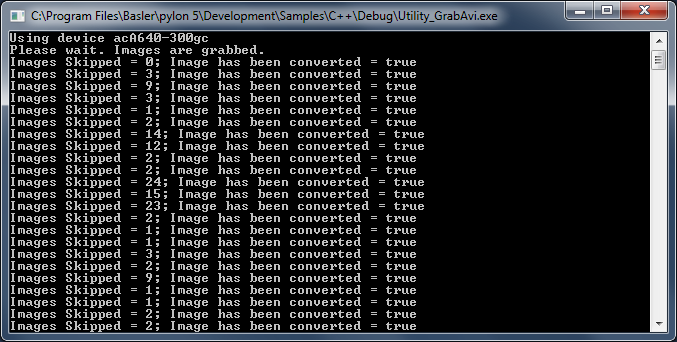

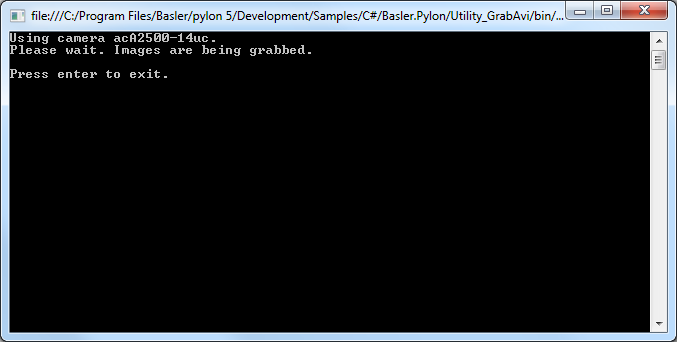

Utility_GrabAvi#

This sample demonstrates how to create a video file in Audio Video Interleave (AVI) format. It is available on Windows operating systems only.

Info

AVI is best for recording high-quality lossless videos because it allows you to record without compression. The disadvantage is that the file size is limited to 2 GB. Once that threshold is reached, the recording stops and an error message is displayed.
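To get a feeling for how quickly the size limit is reached with uncompressed data, the number of frames can be estimated with simple arithmetic. The following sketch is only a rough upper bound: the helper name MaxUncompressedFrames is hypothetical, "2 GB" is read as 2^31 bytes, and container overhead such as headers and index data is ignored.

```cpp
#include <cstdint>

// Hypothetical helper (not part of the pylon API): rough upper bound on
// the number of uncompressed frames that fit below a file size limit.
// Container overhead is ignored.
std::uint64_t MaxUncompressedFrames(std::uint64_t width, std::uint64_t height,
                                    std::uint64_t bytesPerPixel,
                                    std::uint64_t limitBytes)
{
    const std::uint64_t frameBytes = width * height * bytesPerPixel;
    if (frameBytes == 0)
    {
        return 0;
    }
    return limitBytes / frameBytes;  // Integer division rounds down.
}
```

For example, with 1920 x 1080 pixels at 1 byte per pixel and a limit of 2147483648 bytes, the estimate is 1035 frames, which corresponds to roughly 34 seconds of video at 30 frames per second.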

Code#

Info

You can find the sample code here.

The CAviWriter class is used to create an AVI writer object. The writer object takes the following arguments: file name, playback frame rate, pixel output format, width and height of the image, vertical orientation of the image data, and compression options (optional).

StartGrabbing() demonstrates how to start the grabbing by applying the GrabStrategy_LatestImages grab strategy. Using this strategy is recommended when images have to be recorded.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

The DisplayImage class is used to display the grabbed images.

Add() converts the grabbed image to the correct format, if required, and adds it to the AVI file.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

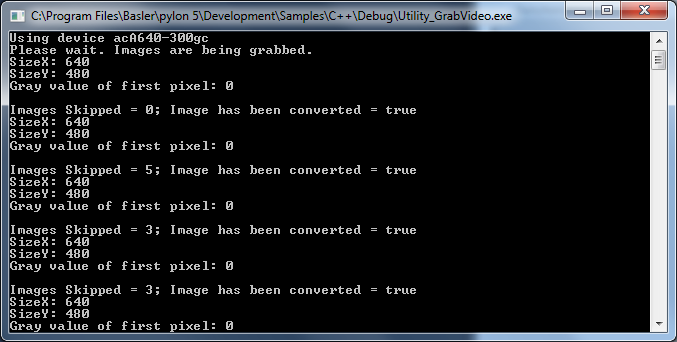

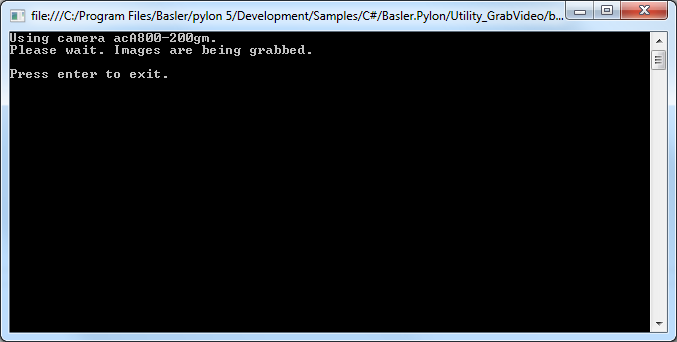

Utility_GrabVideo#

This sample demonstrates how to create a video file in MP4 format. It is presumed that the pylon Supplementary Package for MPEG-4 is already installed.

Info

There are no file size restrictions when recording MP4 videos. However, the MP4 format always compresses data to a certain extent, which results in loss of detail.

Code#

Info

You can find the sample code here.

The CVideoWriter class is used to create a video writer object. Before opening the video writer object, it is initialized with the current parameter values of the ROI width and height, the pixel output format, the playback frame rate, and the quality of compression.

StartGrabbing() demonstrates how to start the grabbing by applying the GrabStrategy_LatestImages grab strategy. Using this strategy is recommended when images have to be recorded.

The CInstantCamera class is used to create an Instant Camera object with the first camera device found.

The CGrabResultPtr class is used to initialize a smart pointer that will receive the grab result data. It controls the reuse and lifetime of the referenced grab result. When all smart pointers referencing a grab result go out of scope, the referenced grab result is reused or destroyed. The grab result is still valid after the camera object it originated from has been destroyed.

The DisplayImage class is used to display the grabbed images.

Add() converts the grabbed image to the correct format, if required, and adds it to the video file.

Applicable Interfaces#

- GigE Vision

- USB3 Vision

- CXP

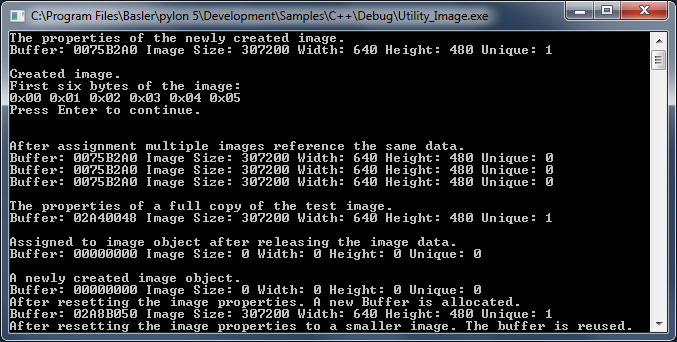

Utility_Image#

This sample demonstrates how to use the pylon image classes CPylonImage and CPylonBitmapImage.

CPylonImage supports handling image buffers of the various existing pixel types.

CPylonBitmapImage can be used to easily create Windows bitmaps for displaying images. In addition, there are two image class-related interfaces in pylon (IImage and IReusableImage).

IImage can be used to access image properties and the image buffer.

The IReusableImage interface extends the IImage interface to be able to reuse the resources of the image to represent a different image.

Both CPylonImage and CPylonBitmapImage implement the IReusableImage interface.

The CGrabResultPtr grab result class provides a cast operator to the IImage interface. This makes using the grab result together with the image classes easier.

Code#

Info

You can find the sample code here.

The CPylonImage class describes an image. It takes care of the following:

- Automatically manages size and lifetime of the image.

- Allows taking over a grab result to prevent its reuse as long as required.

- Allows connecting user buffers or buffers provided by third-party software packages.

- Provides methods for loading and saving an image in different file formats.

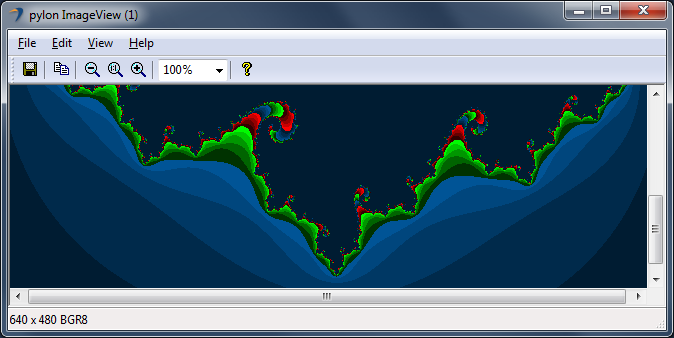

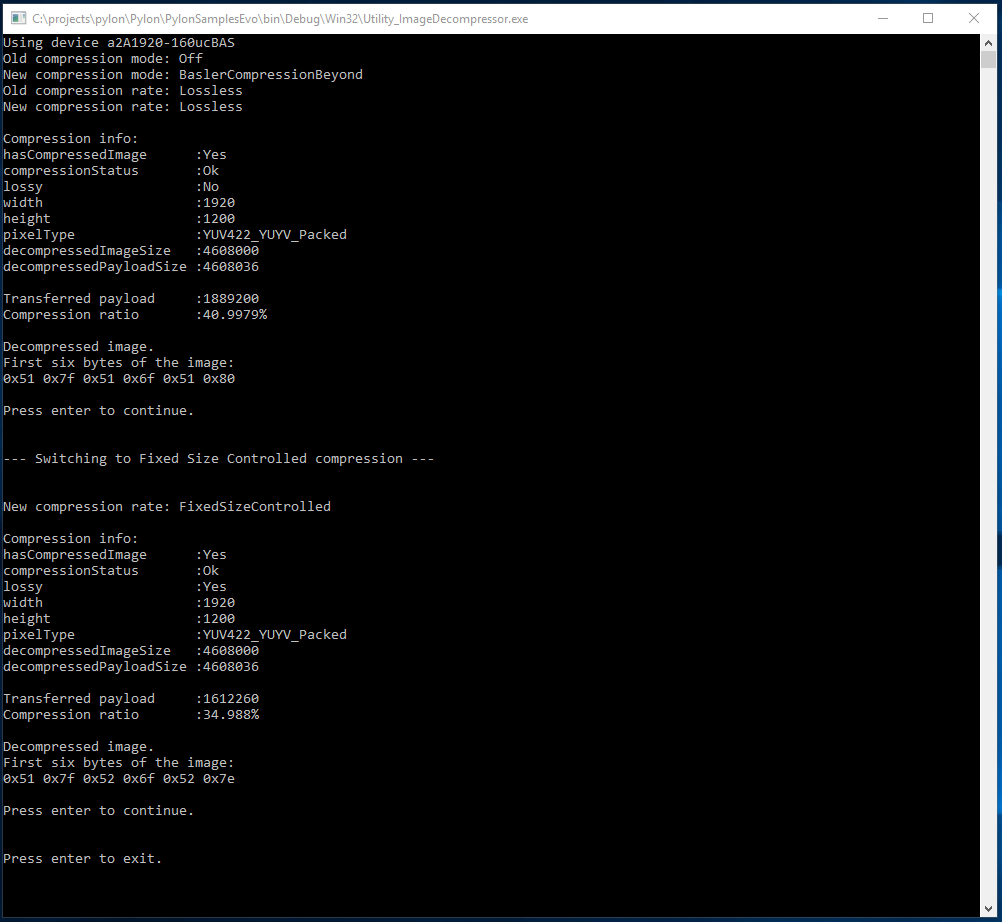

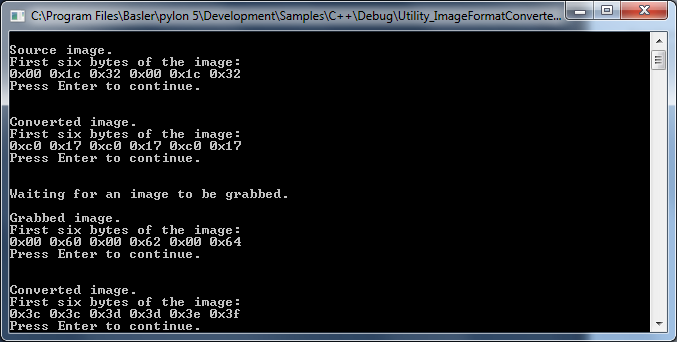

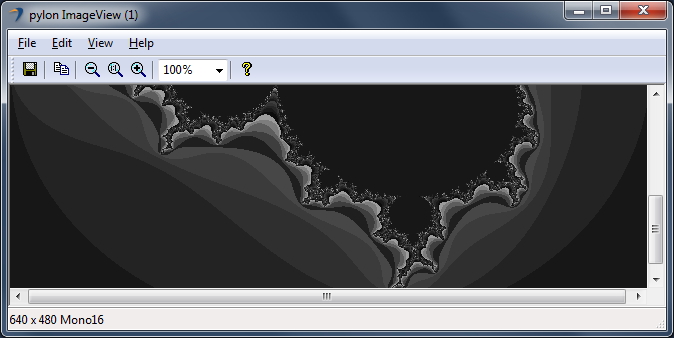

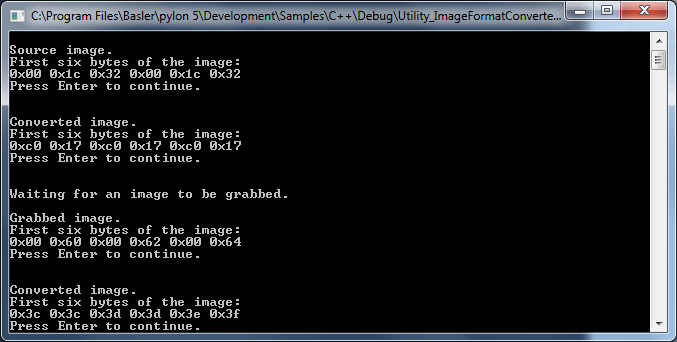

- Serves as the main target format for the